1. Introduction

The fluctuation–dissipation theorems (FDTs) were given their firm basis by the work of Callen & Welton (Reference Callen and Welton1951), Green (Reference Green1954) and Kubo (Reference Kubo1966) in the 1950s and 1960s. They are well known from their frequent use in homogeneous systems to find diffusion coefficients (Liu, Vlugt & Bardow Reference Liu, Vlugt and Bardow2011) or thermal conductivities (Bresme & Armstrong Reference Bresme and Armstrong2014). Porous media form an exception in this context. A theoretical analysis of fluctuations in porous media was given by Bedeaux & Kjelstrup (Reference Bedeaux and Kjelstrup2021) followed by an experimental study by Alfazazi et al. (Reference Alfazazi, Bedeaux, Kjelstrup, Moura, Ebadi, Mostaghimi, McClure and Armstrong2024).

The FDT was originally formulated for systems in equilibrium (Callen & Welton Reference Callen and Welton1951; Reference GreenGreen 1954; Kubo Reference Kubo1966) and is therefore most commonly used in such systems. The fluctuations leading to the Einstein relation can be taken as a central example for the determination of diffusion coefficients (zero frequency time correlations). The FDT can also be formulated for fluctuations of dissipative fluxes in driven systems. This was discussed in detail in the book by de Zarate & Sengers (Reference de Zarate and Sengers2006). The FDT remains valid for these fluxes, but long-range hydrodynamic fluctuations of, for instance, the fluid density appear when a temperature gradient is applied (static space correlations). Experimental evidence was presented by de Zarate & Sengers (Reference de Zarate and Sengers2006). Recent work by Pelargonio & Zaccone (Reference Pelargonio and Zaccone2023) has shown that under shear flow, the fluctuations exhibit strong non-Markovian behaviour, an indication of memory effects, particularly at higher shear rates, leading to non-trivial modifications of the FDT.

In their work, Bedeaux & Kjelstrup (Reference Bedeaux and Kjelstrup2021) gave theoretical expressions for the time and space correlations of the volume fluxes of fluids in a porous medium. Winkler et al. (Reference Winkler, Gjennestad, Bedeaux, Kjelstrup, Cabriolu and Hansen2020) made a first network simulation to determine these correlations. A symmetry in the correlations was established, but a link was not made to either Onsager coefficients or experimental work. The first such link for zero frequency time correlation functions was made by Alfazazi et al. (Reference Alfazazi, Bedeaux, Kjelstrup, Moura, Ebadi, Mostaghimi, McClure and Armstrong2024). The present work aims to develop another experimental procedure for the study of flux fluctuations for two-phase flow in a Hele-Shaw cell. We shall derive expressions and report experiments on zero frequency time correlations in the system and relate the correlations to the Onsager coefficients.

The Onsager coefficients are uniquely connected to the entropy production of a flow system. Therefore, they can be used to describe the energy dissipated by the flow. Their quantitative measure in homogeneous systems is essential for understanding the flow mechanism and a basis for flow optimisation (see, for instance, Kjelstrup et al. Reference Kjelstrup, Bedeaux, Johannessen, Gross and Wilhelmsen2024). For homogeneous systems, the formulae have proven useful for the determination of transport coefficients (Kubo Reference Kubo1966; Carbogno, Ramprasad & Scheffler Reference Carbogno, Ramprasad and Scheffler2017). For heterogeneous flow systems, they have hardly been explored, but we anticipate that the usefulness is equally large. Recent research has explored the connection between Onsager coefficients and the measurement of relative permeability coefficients that govern flow at the Darcy scale. For instance, in their recent work, Alfazazi et al. (Reference Alfazazi, Bedeaux, Kjelstrup, Moura, Ebadi, Mostaghimi, McClure and Armstrong2024) showed how the Green–Kubo formulation of the fluctuation–dissipation theorem can be applied to multiphase flow in porous media. Their results demonstrate that transport coefficients derived from flow fluctuations can closely align with effective phase mobilities obtained from Darcy’s law, reinforcing the idea that microscale fluctuations can inform us about macroscopic transport properties. This work contributes to a growing body of literature aiming to bridge the gap between microscopic thermodynamic principles and macroscopic flow properties in porous materials.

The assumption of local equilibrium in the representative elementary volume (REV) of a porous medium plays a central role in the development of a continuum theory for the related transport phenomena (Kjelstrup et al. Reference Kjelstrup, Bedeaux, Hansen, Hafskjold and Galteland2018, Reference Kjelstrup, Bedeaux, Hansen, Hafskjold and Galteland2019). The FDT is here formulated in terms of the fluctuating fluxes (Bedeaux & Kjelstrup Reference Bedeaux and Kjelstrup2021; Bedeaux, Kjelstrup & Schnell Reference Bedeaux, Kjelstrup and Schnell2024) that apply to an REV in local equilibrium. Velocity fluctuations in multiphase flows in porous media can encode information about the medium’s hydraulic permeability. Porous media permeabilities are extensively used for description of flows on the continuum level and are traditionally found from a plot of the steady-state volumetric flow rate versus an effective pressure gradient (Bear Reference Bear1972). Alternative methods are of interest.

Flow in porous media typically involves a series of general phenomena giving rise to fluctuations, such as ganglion dynamics (Avraam & Payatakes Reference Avraam and Payatakes1995; Tallakstad et al. Reference Tallakstad, Knudsen, Ramstad, Løvoll, Måløy, Toussaint and Flekkøy2009; Rücker et al. Reference Rücker2015; Sinha et al. Reference Sinha, Bender, Danczyk, Keepseagle, Prather, Bray, Thrane, Seymour, Codd and Hansen2017; Eriksen et al. Reference Eriksen, Moura, Jankov, Turquet and Måløy2022), Haines jumps (Haines Reference Haines1930; Måløy et al. Reference Måløy, Furuberg, Feder and Jøssang1992; Furuberg, Måløy & Feder Reference Furuberg, Måløy and Feder1996; Moura et al. Reference Moura, Måløy, Flekkøy and Toussaint2017a ,Reference Moura, Måløy and Toussaint b , Spurin et al. Reference Spurin, Rücker, Moura, Bultreys, Garfi, Berg, Blunt and Krevor2022) and intermittent avalanche-like behaviour (Moura et al. Reference Moura, Måløy, Flekkøy and Toussaint2020; Måløy et al. Reference Måløy, Moura, Hansen, Flekkøy and Toussaint2021; Spurin et al. Reference Spurin, Bultreys, Rücker, Garfi, Schlepütz, Novak, Berg, Blunt and Krevor2021; Armstrong et al. Reference Armstrong, Ott, Georgiadis, Rücker, Schwing and Berg2014; Heijkoop et al. Reference Heijkoop, Rieder, Moura, Rücker and Spurin2024). These fluctuations can either be in the pressure signal, phase velocities or pore configurations and lead to the characteristic flow front developments observed (Rothman Reference Rothman1990; Løvoll et al. Reference Løvoll, Méheust, Toussaint, Schmittbuhl and Måløy2004; Zhao, MacMinn & Juanes Reference Zhao, MacMinn and Juanes2016; Primkulov et al. Reference Primkulov, Talman, Khaleghi, Rangriz Shokri, Chalaturnyk, Zhao, MacMinn and Juanes2018; Moura et al. Reference Moura, Måløy, Flekkøy and Toussaint2020; Brodin et al. Reference Brodin, Rikvold, Moura, Toussaint and Måløy2022, Reference Brodin, Pierce, Reis, Rikvold, Moura, Jankov and Måløy2025). It has also been shown that two-phase flows reach a history-independent steady-state (Erpelding et al. Reference Erpelding, Sinha, Tallakstad, Hansen, Flekkøy and Måløy2013) in the sense that macroscopic properties such as the pressure difference across the model and the saturation reach a stationary state, oscillating around well defined mean values. There is also growing evidence that the noise in system variables (i.e. in the pressure) is characteristic for the type of flow, see Alfazazi et al. (Reference Alfazazi, Bedeaux, Kjelstrup, Moura, Ebadi, Mostaghimi, McClure and Armstrong2024) for an overview. We expect the time scales of the correlations to be important, also for fluctuations in extensive variables as those studied here.

In this paper, we outline an experimental technique to measure the Onsager coefficients for two-phase porous media flows via optical measurements of fluctuations in phase saturation. Unlike the method used by Alfazazi et al. (Reference Alfazazi, Bedeaux, Kjelstrup, Moura, Ebadi, Mostaghimi, McClure and Armstrong2024), volumetric fluxes are not measured. We study instead the statistics of the time derivative of the phase saturation and show that under steady-state conditions, this quantity fluctuates around zero (as expected) and follows a Gaussian distribution (at least for small fluctuations). We compute the autocorrelation function of the fluctuations and its integral, which then gives access to the Onsager coefficients for the case of two incompressible fluid phases. We demonstrate two alternative methods of arriving at this quantity, one considering a time average of the whole experimental signal, and another in which we produce a pseudo-ensemble of subexperiments by clipping the time-signal and averaging the results computed for each subexperiment. The results of both techniques should be identical, but offer different perspectives to the problem: while the full time-averaging method is more straightforward and easier to compute, the pseudo-ensemble averaging procedure gives a better understanding of the statistical convergence of the measures and the characteristics of the underlying phenomena.

The paper is divided as follows. In § 2, we present the experimental set-up employed and boundary conditions driving the flows. Section 3 is dedicated to the theoretical framework with the application of the fluctuation–dissipation theorem for porous medium flows. In particular, we show how correlations in saturation can be used to extract the Onsager coefficients. Section 4 shows our experimental results and describes the different approaches to the measurements. Finally, in § 5, we present the conclusions and discuss possible extensions of the work.

2. Description of the experimental set-up

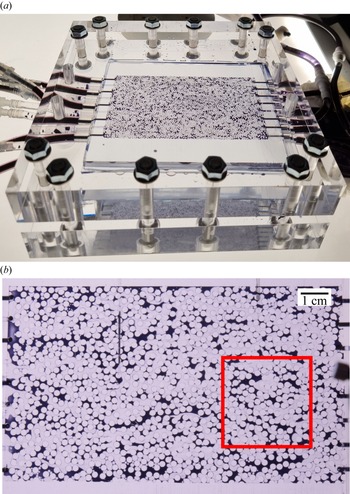

Figure 1. (a) Experimental set-up consisting of a modified Hele-Shaw cell with a 3-D printed porous network. The flow direction is from left to right and the tubes on the left side of the model are connected to two syringe pumps. (b) A snapshot of the process. The red square denotes the approximate field of view of the machine vision camera used for the imaging at higher frame rate.

We have employed a custom-built synthetic transparent network to study the dynamics of steady-state experiments in porous media, see figure 1. A description of a similar set-up can also be found from Måløy et al. (Reference Måløy, Moura, Hansen, Flekkøy and Toussaint2021) and Vincent-Dospital et al. (Reference Vincent-Dospital, Moura, Toussaint and Måløy2022). The set-up consists of a modified Hele-Shaw cell (Hele-Shaw Reference Hele-Shaw1898), formed by a porous structure placed between two plexiglass plates. The plates are clamped around the perimeter using screws to give robustness to the set-up and ensure the quasi-two-dimensional (quasi-2-D) geometry. The porous medium is produced via a stereolithographic three-dimensional (3-D) printing technique (Formlabs Form 3 printer) using a transparent printing material (Clear Resin FLGPCL04). This technique allows us to control the geometry of the porous medium and to visualise the fluid dynamics via regular optical imaging. The porous model consists of a monolayer of cylinders with diameter

![]() $a=2$

mm and height

$a=2$

mm and height

![]() $h=0.5$

mm that are distributed according to a Random Sequential Adsorption (RSA) algorithm (Hinrichsen, Feder & Jøssang Reference Hinrichsen, Feder and Jøssang1986). The minimal distance between the cylinders is chosen to be

$h=0.5$

mm that are distributed according to a Random Sequential Adsorption (RSA) algorithm (Hinrichsen, Feder & Jøssang Reference Hinrichsen, Feder and Jøssang1986). The minimal distance between the cylinders is chosen to be

![]() $0.3$

mm. The porous structure has porosity

$0.3$

mm. The porous structure has porosity

![]() $\phi = 0.57$

and dimensions 12 cm × 7 cm, where the first number denotes the length of the network (measured in the inlet–outlet direction) and the second is the width (measured in the perpendicular direction). The absolute permeability of the model was measured to be

$\phi = 0.57$

and dimensions 12 cm × 7 cm, where the first number denotes the length of the network (measured in the inlet–outlet direction) and the second is the width (measured in the perpendicular direction). The absolute permeability of the model was measured to be

![]() $K = 970$

Darcy in a single-phase flow experiment with water. Nine inlet ports are placed along one side of the model and eight outlet ports are placed on the other side. The nine inlet ports feed the fluids into the system. Dyed water (dynamic viscosity

$K = 970$

Darcy in a single-phase flow experiment with water. Nine inlet ports are placed along one side of the model and eight outlet ports are placed on the other side. The nine inlet ports feed the fluids into the system. Dyed water (dynamic viscosity

![]() $\eta _w = 8.9 \times 10^{-4} \, \text{Pa} \boldsymbol{\, }\text{s}$

, density

$\eta _w = 8.9 \times 10^{-4} \, \text{Pa} \boldsymbol{\, }\text{s}$

, density

![]() $\rho _w = 997 \, \text{kg} \boldsymbol{\, }\text{m}^{-3}$

) and light mineral oil (dynamic viscosity

$\rho _w = 997 \, \text{kg} \boldsymbol{\, }\text{m}^{-3}$

) and light mineral oil (dynamic viscosity

![]() $\eta _o = 2.3 \times 10^{-2} \, \text{Pa} \boldsymbol{\, }\text{s}$

, density

$\eta _o = 2.3 \times 10^{-2} \, \text{Pa} \boldsymbol{\, }\text{s}$

, density

![]() $\rho _o = 833 \, \text{kg} \boldsymbol{\, }\text{m}^{-3}$

) were used as the fluid phases introduced in an alternating pattern through the inlets, with five inlets for water and four for oil. The water phase is dyed dark blue by adding a pigment to it (Nigrosin). The odd number of inlet ports ensures a top–bottom symmetry of the boundary condition of the system, although this is not a strict requirement for the experiment. Each inlet is connected to a syringe with the respective fluid. The five water syringes and four oil syringes are then controlled by two separate pumps (Harvard Apparatus PhD Ultra), so that the total flow rate of each fluid phase can be set independently.

$\rho _o = 833 \, \text{kg} \boldsymbol{\, }\text{m}^{-3}$

) were used as the fluid phases introduced in an alternating pattern through the inlets, with five inlets for water and four for oil. The water phase is dyed dark blue by adding a pigment to it (Nigrosin). The odd number of inlet ports ensures a top–bottom symmetry of the boundary condition of the system, although this is not a strict requirement for the experiment. Each inlet is connected to a syringe with the respective fluid. The five water syringes and four oil syringes are then controlled by two separate pumps (Harvard Apparatus PhD Ultra), so that the total flow rate of each fluid phase can be set independently.

A high-resolution camera (Nikon D7100) records the experiment with pictures every 30 s. Preliminary experiments showed that the typical decorrelation time for the dynamics was too fast to be captured with this frame rate, so we opted to employ a secondary machine vision camera (Allied Vision Alvium 1800 U-158c) for filming a smaller segment of the model at a higher frame rate (10 frames per second). The approximate field of view of this second camera is shown in figure 1(b), and figure 1(a) shows an image of the whole experiment taken by the first camera. The core of the analysis in this paper is focused on the data from the machine vision camera, but having access to the high-resolution images of the whole model was also important. It allowed us for instance to guarantee that boundary effects from inlet (channelling due to point injection of the fluids) would dissipate before reaching the field of view of the machine vision camera (Erpelding et al. Reference Erpelding, Sinha, Tallakstad, Hansen, Flekkøy and Måløy2013, Aursjø et al. Reference Aursjø, Erpelding, Tallakstad, Flekkøy, Hansen and Måløy2014). The boundary effects are rate- and fluid-dependent, and we noticed that a higher injection rate results in the inlet channelling effects penetrating deeper into the model, thus rendering a smaller segment of the model under a steady-state condition.

3. Theoretical framework

3.1. Saturations in a Hele-Shaw cell

In this work, we have treated the Hele-Shaw cell as purely two-dimensional. In reality, it has in fact a finite thickness in the

![]() $z$

-direction which can also lead to interesting effects not treated explicitly here such as the formation of capillary bridges and other three-dimensional film flow effects (Moura et al. Reference Moura, Flekkøy, Måløy, Schäfer and Toussaint2019, Reis et al. Reference Reis, Moura, Linga, Rikvold, Toussaint, Flekkøy and Måløy2023, Reference Reis, Linga, Moura, Rikvold, Toussaint, Flekkøy and Måløy2025). In the following discussion, when we use a quantity as a function of

$z$

-direction which can also lead to interesting effects not treated explicitly here such as the formation of capillary bridges and other three-dimensional film flow effects (Moura et al. Reference Moura, Flekkøy, Måløy, Schäfer and Toussaint2019, Reis et al. Reference Reis, Moura, Linga, Rikvold, Toussaint, Flekkøy and Måløy2023, Reference Reis, Linga, Moura, Rikvold, Toussaint, Flekkøy and Måløy2025). In the following discussion, when we use a quantity as a function of

![]() $x, y$

and not of

$x, y$

and not of

![]() $z$

, this implies that we use the average of this quantity over the thickness of the cell.

$z$

, this implies that we use the average of this quantity over the thickness of the cell.

For each

![]() $A=l_{x} \times l_{y}$

surface element, one can at each moment in time measure the areas covered by the more wetting,

$A=l_{x} \times l_{y}$

surface element, one can at each moment in time measure the areas covered by the more wetting,

![]() $A_{w}$

, and the less wetting,

$A_{w}$

, and the less wetting,

![]() $A_{n}$

, fluid phases, identified by the subscripts

$A_{n}$

, fluid phases, identified by the subscripts

![]() $w$

and

$w$

and

![]() $n$

, respectively, as well as of the solid (‘rock’) phase,

$n$

, respectively, as well as of the solid (‘rock’) phase,

![]() $A_{r}$

. The surface area of the pores is

$A_{r}$

. The surface area of the pores is

![]() $A_{p}=A_{w}+A_{n}$

. The porosity is defined by

$A_{p}=A_{w}+A_{n}$

. The porosity is defined by

![]() $\phi \equiv A_{p} / A=A_{p} / (A_{p}+A_{r} )$

. We assume that

$\phi \equiv A_{p} / A=A_{p} / (A_{p}+A_{r} )$

. We assume that

![]() $A_{p}$

and

$A_{p}$

and

![]() $A_{r}$

and, therefore,

$A_{r}$

and, therefore,

![]() $\phi$

are time independent. The saturations of the more and the less wetting fluid phases are defined by

$\phi$

are time independent. The saturations of the more and the less wetting fluid phases are defined by

![]() $S_{w} \equiv A_{w} / A_{p}$

and

$S_{w} \equiv A_{w} / A_{p}$

and

![]() $S_{n} \equiv A_{n} / A_{p}$

. From these definitions, it follows that

$S_{n} \equiv A_{n} / A_{p}$

. From these definitions, it follows that

![]() $S_{w}+S_{n}=1$

, where it is important to note that this is also the case if one or two of the fluid phases are compressible.

$S_{w}+S_{n}=1$

, where it is important to note that this is also the case if one or two of the fluid phases are compressible.

In the formulation of the non-equilibrium thermodynamics of porous media, the so-called representative elementary volume (REV) is employed (Kjelstrup et al. Reference Kjelstrup, Bedeaux, Hansen, Hafskjold and Galteland2018, Reference Kjelstrup, Bedeaux, Hansen, Hafskjold and Galteland2019). For the Hele-Shaw cell, it would be more appropriate to call it the representative elementary surface area. This implies that we use a surface area

![]() $A^{\textit{REV}}=l_{x}^{\textit{REV}} \times l_{y}^{\textit{REV}}$

that is large enough to cover a representative selection of pores, but not larger than that. For each point

$A^{\textit{REV}}=l_{x}^{\textit{REV}} \times l_{y}^{\textit{REV}}$

that is large enough to cover a representative selection of pores, but not larger than that. For each point

![]() $x, y$

in the cell, the representative elementary surface area is defined as the surface area for which

$x, y$

in the cell, the representative elementary surface area is defined as the surface area for which

![]() $x\lt x^{\prime }\lt x+l_{x}^{\textit{REV}}$

and

$x\lt x^{\prime }\lt x+l_{x}^{\textit{REV}}$

and

![]() $y\lt y^{\prime }\lt y+l_{y}^{\textit{REV}}$

. The introduction of the REV makes it possible to give a continuous coarse-grained description of the porous medium in terms of averages over the REV (Kjelstrup et al. Reference Kjelstrup, Bedeaux, Hansen, Hafskjold and Galteland2018, Reference Kjelstrup, Bedeaux, Hansen, Hafskjold and Galteland2019). The fluctuation–dissipation theorems could then be derived (Bedeaux & Kjelstrup Reference Bedeaux and Kjelstrup2021) in terms of such a coarse-grained description.

$y\lt y^{\prime }\lt y+l_{y}^{\textit{REV}}$

. The introduction of the REV makes it possible to give a continuous coarse-grained description of the porous medium in terms of averages over the REV (Kjelstrup et al. Reference Kjelstrup, Bedeaux, Hansen, Hafskjold and Galteland2018, Reference Kjelstrup, Bedeaux, Hansen, Hafskjold and Galteland2019). The fluctuation–dissipation theorems could then be derived (Bedeaux & Kjelstrup Reference Bedeaux and Kjelstrup2021) in terms of such a coarse-grained description.

On the pore level, we introduce the characteristic functions

![]() $s_{w}(x, y, t), s_{n}(x, y, t)$

and

$s_{w}(x, y, t), s_{n}(x, y, t)$

and

![]() $s_{r}(x, y, t)$

. They are equal to 1 in the corresponding phase and 0 in the other phases. Their sum is, by definition, 1 everywhere. The surface areas of the three phases in the REV are given in terms of the characteristic functions by

$s_{r}(x, y, t)$

. They are equal to 1 in the corresponding phase and 0 in the other phases. Their sum is, by definition, 1 everywhere. The surface areas of the three phases in the REV are given in terms of the characteristic functions by

We have

![]() $\phi ^{\textit{REV}} \equiv A_{p}^{\textit{REV}} / A^{\textit{REV}}=A_{p}^{\textit{REV}} / (A_{p}^{\textit{REV}}+A_{r}^{\textit{REV}} )$

. We will assume that

$\phi ^{\textit{REV}} \equiv A_{p}^{\textit{REV}} / A^{\textit{REV}}=A_{p}^{\textit{REV}} / (A_{p}^{\textit{REV}}+A_{r}^{\textit{REV}} )$

. We will assume that

![]() $A_{p}^{\textit{REV}}$

and

$A_{p}^{\textit{REV}}$

and

![]() $A_{r}^{\textit{REV}}$

and, therefore,

$A_{r}^{\textit{REV}}$

and, therefore,

![]() $\phi ^{\textit{REV}}$

are time independent. The saturations of the more and the less wetting fluid phases are defined by

$\phi ^{\textit{REV}}$

are time independent. The saturations of the more and the less wetting fluid phases are defined by

![]() $S_{w}^{\textit{REV}} \equiv A_{w}^{\textit{REV}} / A_{p}^{\textit{REV}}$

and

$S_{w}^{\textit{REV}} \equiv A_{w}^{\textit{REV}} / A_{p}^{\textit{REV}}$

and

![]() $S_{n}^{\textit{REV}} \equiv A_{n}^{\textit{REV}} / A_{p}^{\textit{REV}}$

. From these definitions, it follows that

$S_{n}^{\textit{REV}} \equiv A_{n}^{\textit{REV}} / A_{p}^{\textit{REV}}$

. From these definitions, it follows that

![]() $S_{w}^{\textit{REV}}+S_{n}^{\textit{REV}}=1$

. Both

$S_{w}^{\textit{REV}}+S_{n}^{\textit{REV}}=1$

. Both

![]() $S_{w}^{\textit{REV}}$

and

$S_{w}^{\textit{REV}}$

and

![]() $S_{n}^{\textit{REV}}$

can depend on

$S_{n}^{\textit{REV}}$

can depend on

![]() $(x{,}y{,} t)$

.

$(x{,}y{,} t)$

.

At each point

![]() $x, y$

along the cell, the representative elementary surface area defines the value of the various quantities as averages over the REV. When one measures saturations, one usually considers larger surface areas. For a surface area

$x, y$

along the cell, the representative elementary surface area defines the value of the various quantities as averages over the REV. When one measures saturations, one usually considers larger surface areas. For a surface area

![]() $A_{\textit{xy}}=l_{x} \times l_{y}$

, where

$A_{\textit{xy}}=l_{x} \times l_{y}$

, where

![]() $l_{x}\gt l_{x}^{\textit{REV}}$

and

$l_{x}\gt l_{x}^{\textit{REV}}$

and

![]() $l_{y}\gt l_{y}^{\textit{REV}}$

, one has

$l_{y}\gt l_{y}^{\textit{REV}}$

, one has

for

![]() $j=w, n$

. Similar equalities are valid for all the thermodynamic properties of the larger surface areas.

$j=w, n$

. Similar equalities are valid for all the thermodynamic properties of the larger surface areas.

After a steady-state situation is achieved, the saturations will fluctuate around their average value. These averages can be found using

where

![]() $\tau$

is sufficiently large. The averages of the REV variables are defined in the same way.

$\tau$

is sufficiently large. The averages of the REV variables are defined in the same way.

The quantity in which we are interested is the correlation function of the time derivative of the saturations:

\begin{equation} \begin{aligned} C_{ij}(\Delta x, \Delta y, \Delta t) &\equiv \left \langle \frac {\partial S_{i}(x, y, t)}{\partial t} \frac {\partial S_{\kern-1.5pt j}(x+\Delta x, y+\Delta y, t+\Delta t)}{\partial t} \right \rangle \\ &= \frac {1}{\tau } \int _{t}^{t+\tau } {\rm d}t' \frac {\partial S_{i}\big(x, y, t' \big)}{\partial t'} \frac {\partial S_{\kern-1.5pt j}\big(x+\Delta x, y+\Delta y, t'+\Delta t \big)}{\partial t'} \:. \end{aligned} \end{equation}

\begin{equation} \begin{aligned} C_{ij}(\Delta x, \Delta y, \Delta t) &\equiv \left \langle \frac {\partial S_{i}(x, y, t)}{\partial t} \frac {\partial S_{\kern-1.5pt j}(x+\Delta x, y+\Delta y, t+\Delta t)}{\partial t} \right \rangle \\ &= \frac {1}{\tau } \int _{t}^{t+\tau } {\rm d}t' \frac {\partial S_{i}\big(x, y, t' \big)}{\partial t'} \frac {\partial S_{\kern-1.5pt j}\big(x+\Delta x, y+\Delta y, t'+\Delta t \big)}{\partial t'} \:. \end{aligned} \end{equation}

We focus now on the part of the Hele-Shaw cell that is homogeneous so that this time average only depends on

![]() $\Delta x, \Delta y, \Delta t$

. This is away from the inlet along the centre of the cell. The spatial range of the correlations is small compared with the size of the REV. Let us then consider the quantity

$\Delta x, \Delta y, \Delta t$

. This is away from the inlet along the centre of the cell. The spatial range of the correlations is small compared with the size of the REV. Let us then consider the quantity

![]() $C_{\textit{ij}}(\Delta t)$

as the spatial integral of the correlation function, i.e.

$C_{\textit{ij}}(\Delta t)$

as the spatial integral of the correlation function, i.e.

In the non-homogeneous part of the Hele-Shaw cell (for instance, close to the inlets),

![]() $C_{\textit{ij}}$

would also depend on

$C_{\textit{ij}}$

would also depend on

![]() $x, y$

. In the case of systems without memory, the temporal range of the correlations is also small, and it is then appropriate to also integrate over

$x, y$

. In the case of systems without memory, the temporal range of the correlations is also small, and it is then appropriate to also integrate over

![]() $\Delta t$

:

$\Delta t$

:

Since the sum of saturations adds to one, there is only one independent saturation. It then follows that

and for the spatially integrated form,

For two fluids, this gives

and

These properties are true whether the fluids are compressible or not. The relations are compatible with a property of dependent variables; the determinant of their coefficient matrix is zero.

3.2. Incompressible fluids

The mass densities for incompressible fluids in the REV are related to the saturations by

where

![]() $\rho _{i}$

are the constant mass densities of the pure fluid phases. Furthermore, we assume that the porosity

$\rho _{i}$

are the constant mass densities of the pure fluid phases. Furthermore, we assume that the porosity

![]() $\phi$

does not depend on

$\phi$

does not depend on

![]() $x, y$

.

$x, y$

.

The mass balance is

For the saturations of incompressible fluid phases, this implies

For a surface area

![]() $A_{x y}=l_{x} \times l_{y}$

, where

$A_{x y}=l_{x} \times l_{y}$

, where

![]() $l_{x}\gt l_{x}^{\textit{REV}}$

and

$l_{x}\gt l_{x}^{\textit{REV}}$

and

![]() $l_{y}\gt l_{y}^{\textit{REV}}$

, (3.2) together with (3.13) gives for incompressible fluid phases,

$l_{y}\gt l_{y}^{\textit{REV}}$

, (3.2) together with (3.13) gives for incompressible fluid phases,

\begin{equation} \begin{aligned} A_{x y}\frac {\partial S_{i}(x, y, t)}{\partial t} & = \int _{x}^{x+l_{x}} {\rm d} x^{\prime } \int _{y}^{y+l_{y}} {\rm d} y^{\prime } \frac {\partial S_{i}^{\textit{REV}}\left (x^{\prime }, y^{\prime }, t\right )}{\partial t} \\ & =-\frac {1}{\rho _{i} \phi } \int _{x}^{x+l_{x}} {\rm d} x^{\prime } \int _{y}^{y+l_{y}} {\rm d} y^{\prime } \operatorname {div} \boldsymbol{J}_{i}^{\textit{REV}}\left (x^{\prime }, y^{\prime }, t\right ) \\ & =\frac {1}{\rho _{i} \phi } \left \{\int _{y}^{y+l_{y}} {\rm d} y^{\prime }\left [J_{i, x}^{\textit{REV}}\left (x, y^{\prime }, t\right )-J_{i, x}^{\textit{REV}}\left (x+l_{x}, y^{\prime }, t\right )\right ]\right . \\ & +\left .\int _{x}^{x+l_{x}} {\rm d} x^{\prime }\left [J_{i, y}^{\textit{REV}}\left (x^{\prime }, y, t\right )-J_{i, y}^{\textit{REV}}\left (x^{\prime }, y+l_{y}, t\right )\right ]\right \} \! . \end{aligned} \end{equation}

\begin{equation} \begin{aligned} A_{x y}\frac {\partial S_{i}(x, y, t)}{\partial t} & = \int _{x}^{x+l_{x}} {\rm d} x^{\prime } \int _{y}^{y+l_{y}} {\rm d} y^{\prime } \frac {\partial S_{i}^{\textit{REV}}\left (x^{\prime }, y^{\prime }, t\right )}{\partial t} \\ & =-\frac {1}{\rho _{i} \phi } \int _{x}^{x+l_{x}} {\rm d} x^{\prime } \int _{y}^{y+l_{y}} {\rm d} y^{\prime } \operatorname {div} \boldsymbol{J}_{i}^{\textit{REV}}\left (x^{\prime }, y^{\prime }, t\right ) \\ & =\frac {1}{\rho _{i} \phi } \left \{\int _{y}^{y+l_{y}} {\rm d} y^{\prime }\left [J_{i, x}^{\textit{REV}}\left (x, y^{\prime }, t\right )-J_{i, x}^{\textit{REV}}\left (x+l_{x}, y^{\prime }, t\right )\right ]\right . \\ & +\left .\int _{x}^{x+l_{x}} {\rm d} x^{\prime }\left [J_{i, y}^{\textit{REV}}\left (x^{\prime }, y, t\right )-J_{i, y}^{\textit{REV}}\left (x^{\prime }, y+l_{y}, t\right )\right ]\right \} \! . \end{aligned} \end{equation}

The subscripts indicate the

![]() $x$

or

$x$

or

![]() $y$

component of the fluxes. The aim is to substitute (3.14) into (3.4) and to use the fluctuation–dissipation theorems for the mass fluxes, to obtain similar theorems for the fluctuation of the time derivative of the saturations. In the next section, we discuss the relevant equations for the mass fluxes.

$y$

component of the fluxes. The aim is to substitute (3.14) into (3.4) and to use the fluctuation–dissipation theorems for the mass fluxes, to obtain similar theorems for the fluctuation of the time derivative of the saturations. In the next section, we discuss the relevant equations for the mass fluxes.

3.3. Fluctuation–dissipation theorems for the mass fluxes

We restrict ourselves to the case where the temperature

![]() $T$

in the Hele-Shaw cell is everywhere constant. For this case, the linear flux–force relations for independent mass fluxes with memory (De Groot & Mazur Reference De Groot and Mazur2003; Bedeaux et al. Reference Bedeaux, Kjelstrup, Berg, Alfazazi and Armstrong2025) are given by

$T$

in the Hele-Shaw cell is everywhere constant. For this case, the linear flux–force relations for independent mass fluxes with memory (De Groot & Mazur Reference De Groot and Mazur2003; Bedeaux et al. Reference Bedeaux, Kjelstrup, Berg, Alfazazi and Armstrong2025) are given by

\begin{equation} \boldsymbol{J}_{i}^{\textit{REV}}(x, y, t)=\int _{-\infty }^{\infty } \sum _{j=1}^{n} L_{ij}\big (t-t^{\prime }\big ) \boldsymbol{X}_{j}^{q}\big (x, y, t^{\prime }\big ) {\rm d} t^{\prime } . \end{equation}

\begin{equation} \boldsymbol{J}_{i}^{\textit{REV}}(x, y, t)=\int _{-\infty }^{\infty } \sum _{j=1}^{n} L_{ij}\big (t-t^{\prime }\big ) \boldsymbol{X}_{j}^{q}\big (x, y, t^{\prime }\big ) {\rm d} t^{\prime } . \end{equation}

In this expression,

![]() $\boldsymbol{J}_{i}^{\textit{REV}}$

are the mass fluxes,

$\boldsymbol{J}_{i}^{\textit{REV}}$

are the mass fluxes,

![]() $L_{ij}$

are the generalised Onsager coefficients (memory kernel) and

$L_{ij}$

are the generalised Onsager coefficients (memory kernel) and

![]() $\boldsymbol{X}_{j}^{q} (x, y, t^{\prime } ) \equiv -({1}/{T}) \operatorname {grad} \mu _{j, T} (x, y, t^{\prime } )$

are the conjugate thermodynamic forces. The gradient of the chemical potentials is taken, keeping the temperature constant. This is indicated by the subscript

$\boldsymbol{X}_{j}^{q} (x, y, t^{\prime } ) \equiv -({1}/{T}) \operatorname {grad} \mu _{j, T} (x, y, t^{\prime } )$

are the conjugate thermodynamic forces. The gradient of the chemical potentials is taken, keeping the temperature constant. This is indicated by the subscript

![]() $T$

on the chemical potential. Equation (3.15) gives the fluxes on the coarse-grained level. To describe fluctuations, we need to add a random contribution (Bedeaux & Kjelstrup Reference Bedeaux and Kjelstrup2021; Bedeaux et al. Reference Bedeaux, Kjelstrup and Schnell2024, Reference Bedeaux, Kjelstrup, Berg, Alfazazi and Armstrong2025)

$T$

on the chemical potential. Equation (3.15) gives the fluxes on the coarse-grained level. To describe fluctuations, we need to add a random contribution (Bedeaux & Kjelstrup Reference Bedeaux and Kjelstrup2021; Bedeaux et al. Reference Bedeaux, Kjelstrup and Schnell2024, Reference Bedeaux, Kjelstrup, Berg, Alfazazi and Armstrong2025)

where the average of the random contribution in the last term is zero. The correlation functions are according to the fluctuation–dissipation theorems given by

where

![]() $\boldsymbol{1}$

is a unit tensor and

$\boldsymbol{1}$

is a unit tensor and

![]() $\kappa _B$

is a proportionality factor defined via (3.17) (

$\kappa _B$

is a proportionality factor defined via (3.17) (

![]() $\kappa _B$

is further discussed in § 4.5). We assume the spatial correlations to be short-range compared with the macroscale phenomena which gives the

$\kappa _B$

is further discussed in § 4.5). We assume the spatial correlations to be short-range compared with the macroscale phenomena which gives the

![]() $\delta (x^{\prime }-x ) \delta (y^{\prime }-y )$

term. When the Hele-Shaw cell is homogeneous, the matrix

$\delta (x^{\prime }-x ) \delta (y^{\prime }-y )$

term. When the Hele-Shaw cell is homogeneous, the matrix

![]() $L_{\textit{ij}}$

is independent of

$L_{\textit{ij}}$

is independent of

![]() $x, y$

. In the actual experimental cell, this is only the case away from the inlet along the central part of the model. For a stationary system,

$x, y$

. In the actual experimental cell, this is only the case away from the inlet along the central part of the model. For a stationary system,

![]() $L_{\textit{ij}}$

only depends on

$L_{\textit{ij}}$

only depends on

![]() $ (t^{\prime }-t )$

, and not on both

$ (t^{\prime }-t )$

, and not on both

![]() $t$

and

$t$

and

![]() $ (t^{\prime }-t )$

. The matrix of Onsager coefficients is symmetric giving rise to the factor 2 in (3.17). This symmetry was observed in network simulations (Winkler et al. Reference Winkler, Gjennestad, Bedeaux, Kjelstrup, Cabriolu and Hansen2020). For dependent fluxes, the determinant of the coefficient matrix is equal to zero, see (3.10). Onsager proved the symmetry of the coefficient matrix using independent fluxes. Coefficient symmetry can be maintained by construction (De Groot & Mazur Reference De Groot and Mazur2003) for dependent fluxes like here.

$ (t^{\prime }-t )$

. The matrix of Onsager coefficients is symmetric giving rise to the factor 2 in (3.17). This symmetry was observed in network simulations (Winkler et al. Reference Winkler, Gjennestad, Bedeaux, Kjelstrup, Cabriolu and Hansen2020). For dependent fluxes, the determinant of the coefficient matrix is equal to zero, see (3.10). Onsager proved the symmetry of the coefficient matrix using independent fluxes. Coefficient symmetry can be maintained by construction (De Groot & Mazur Reference De Groot and Mazur2003) for dependent fluxes like here.

3.4. Correlations for the saturation derivatives

Substitution of (3.14) into (3.4) and use of (3.17) for incompressible fluid phases gives, after some algebra,

\begin{equation} \begin{aligned} C_{\textit{ij}}(\Delta x, \Delta y, \Delta t) &\equiv \left \langle \frac {\partial S_{i}(x, y, t)}{\partial t} \frac {\partial S_{\kern-1.5pt j}(x+\Delta x, y+\Delta y, t+\Delta t)}{\partial t}\right \rangle \\ &= \frac {8 \kappa _B L_{\textit{ij}}(\Delta t)}{\rho _{i} \rho _{j} \phi ^{2} A_{\textit{xy}}} \delta (\Delta x) \delta (\Delta y). \end{aligned} \end{equation}

\begin{equation} \begin{aligned} C_{\textit{ij}}(\Delta x, \Delta y, \Delta t) &\equiv \left \langle \frac {\partial S_{i}(x, y, t)}{\partial t} \frac {\partial S_{\kern-1.5pt j}(x+\Delta x, y+\Delta y, t+\Delta t)}{\partial t}\right \rangle \\ &= \frac {8 \kappa _B L_{\textit{ij}}(\Delta t)}{\rho _{i} \rho _{j} \phi ^{2} A_{\textit{xy}}} \delta (\Delta x) \delta (\Delta y). \end{aligned} \end{equation}

Equation (3.5) subsequently gives

We shall use this to obtain the conductivity matrix

![]() $L_{\textit{ij}}$

from the fluctuations of the time derivative of the saturation. With (3.10), it follows that

$L_{\textit{ij}}$

from the fluctuations of the time derivative of the saturation. With (3.10), it follows that

This equation means that if we measure one coefficient, we know all of them. This property of the Onsager matrix follows from the relationship imposed upon the fluxes. There is only one flux–force product contributing to the entropy production (energy dissipation), because there is only one driving force. Any extended expression in terms of two fluxes (still only one driving force) leads to the inter-dependency of coefficients. A gradient in saturation gives a second driving force. Without this, an expansion of the flux description to describe two-component flow will give an undetermined set of coefficients. As a consequence, the matrix of the

![]() $L_{\textit{ij}}$

-coefficients cannot be inverted, as the determinant of the coefficient matrix is zero. Nevertheless, the various coefficients are included here because they can be used to make a link to the common relative permeability model, an idea we further explore in the next section.

$L_{\textit{ij}}$

-coefficients cannot be inverted, as the determinant of the coefficient matrix is zero. Nevertheless, the various coefficients are included here because they can be used to make a link to the common relative permeability model, an idea we further explore in the next section.

If the temporal range of correlations is small, it is convenient to integrate over

![]() $\Delta t$

. From (3.19) and (3.6), we have

$\Delta t$

. From (3.19) and (3.6), we have

In this limit of fast decaying temporal correlations, we can make the assumption

![]() $L_{\textit{ij}}(\Delta t) = L_{\textit{ij}}\delta (\Delta t)$

. We then have

$L_{\textit{ij}}(\Delta t) = L_{\textit{ij}}\delta (\Delta t)$

. We then have

Using this equation, the product

![]() $\kappa _B L_{\textit{ij}}$

can be obtained from the measurement of

$\kappa _B L_{\textit{ij}}$

can be obtained from the measurement of

![]() $\varLambda _{\textit{ij}}^\infty$

, i.e. the time integral of the correlation function of saturation derivatives. We will later see that the measurement of

$\varLambda _{\textit{ij}}^\infty$

, i.e. the time integral of the correlation function of saturation derivatives. We will later see that the measurement of

![]() $\varLambda _{\textit{ij}}^\infty$

itself presents some challenges and we will show how a related quantity

$\varLambda _{\textit{ij}}^\infty$

itself presents some challenges and we will show how a related quantity

![]() $\varLambda _{\textit{ij}}^*$

can be used instead. We have focused on the wetting phase saturation

$\varLambda _{\textit{ij}}^*$

can be used instead. We have focused on the wetting phase saturation

![]() $S_w$

, from which we aim to access

$S_w$

, from which we aim to access

![]() $L_{ww}$

as discussed. The other coefficients can be obtained in the case of incompressible fluids via (3.20). A different approach was taken recently by Alfazazi et al. (Reference Alfazazi, Bedeaux, Kjelstrup, Moura, Ebadi, Mostaghimi, McClure and Armstrong2024), where the authors considered the full volume flux composed of both fluid phases instead, as a pathway to recover the Onsager coefficient.

$L_{ww}$

as discussed. The other coefficients can be obtained in the case of incompressible fluids via (3.20). A different approach was taken recently by Alfazazi et al. (Reference Alfazazi, Bedeaux, Kjelstrup, Moura, Ebadi, Mostaghimi, McClure and Armstrong2024), where the authors considered the full volume flux composed of both fluid phases instead, as a pathway to recover the Onsager coefficient.

3.5. Flux–force relations and a link with the relative permeability model

We present here some key concepts necessary to establish a link between the classical relative permeability theory of two-phase porous media flows (Bear Reference Bear1972) and a description using non-equilibrium thermodynamics (NET). More details can be found from Kjelstrup et al. (Reference Kjelstrup, Bedeaux, Hansen, Hafskjold and Galteland2019) and Bedeaux et al. (Reference Bedeaux, Kjelstrup and Schnell2024).

It is common to express the Darcy velocity of the separate fluid phases by

where, as usual,

![]() $u_i$

is the Darcy velocity,

$u_i$

is the Darcy velocity,

![]() $V_i$

is the partial molar volume in the REV (see definition later in (3.29)),

$V_i$

is the partial molar volume in the REV (see definition later in (3.29)),

![]() $k_{ri}$

denotes the relative permeability,

$k_{ri}$

denotes the relative permeability,

![]() $K$

is the absolute permeability of the porous medium,

$K$

is the absolute permeability of the porous medium,

![]() $\eta _i$

is the dynamic viscosity and

$\eta _i$

is the dynamic viscosity and

![]() $p_i$

is the pressure of the single bulk phase. In all quantities, the subindex

$p_i$

is the pressure of the single bulk phase. In all quantities, the subindex

![]() $i$

denotes the specific phase (wetting or non-wetting). The pressure gradients of the separate phases have been used.

$i$

denotes the specific phase (wetting or non-wetting). The pressure gradients of the separate phases have been used.

The entropy production is composed of two flux–force products

Its value is invariant to change of variables. Here,

![]() $J_w$

and

$J_w$

and

![]() $J_n$

are the fluxes of wetting and non-wetting components, respectively, in mol (m

$J_n$

are the fluxes of wetting and non-wetting components, respectively, in mol (m

![]() $^2$

s)−1,

$^2$

s)−1,

![]() $T$

is the temperature and

$T$

is the temperature and

![]() $\mu _w, \mu _n$

in a thermodynamic description are the component chemical potentials. (The notation for the chemical potential should not be confused with the dynamic viscosity which is typically associated with the letter

$\mu _w, \mu _n$

in a thermodynamic description are the component chemical potentials. (The notation for the chemical potential should not be confused with the dynamic viscosity which is typically associated with the letter

![]() $\mu$

. Here, we use the symbol

$\mu$

. Here, we use the symbol

![]() $\eta$

for viscosity.) The gradients in these give the driving forces. For all practical purposes, we are working with isothermal systems. The REV is a small thermodynamic system with the temperature controlled by the environment and we can measure its value in a normal way (Bedeaux et al. Reference Bedeaux, Kjelstrup and Schnell2024). It follows that the linear flux–force relations can be written as

$\eta$

for viscosity.) The gradients in these give the driving forces. For all practical purposes, we are working with isothermal systems. The REV is a small thermodynamic system with the temperature controlled by the environment and we can measure its value in a normal way (Bedeaux et al. Reference Bedeaux, Kjelstrup and Schnell2024). It follows that the linear flux–force relations can be written as

To connect the two descriptions, we shall use as definition of the pressure gradient of a single phase

![]() $i=w,n$

,

$i=w,n$

,

where

![]() $\mu _i$

contains the effect of possible saturation variations as well as capillary pressures. The value of

$\mu _i$

contains the effect of possible saturation variations as well as capillary pressures. The value of

![]() $\mu _i$

can be accessed by equilibrium conditions for the chemical potential at the REV boundary. The

$\mu _i$

can be accessed by equilibrium conditions for the chemical potential at the REV boundary. The

![]() $V_i$

is the partial molar volume of

$V_i$

is the partial molar volume of

![]() $i$

or partial specific volume in the REV. It is defined for the REV by

$i$

or partial specific volume in the REV. It is defined for the REV by

\begin{equation} V_i \equiv \left ( \frac {{\rm d}V^{\textit{REV}}}{{\rm d}N_i^{\textit{REV}}} \right )_{T, N_j^{\textit{REV}}} . \end{equation}

\begin{equation} V_i \equiv \left ( \frac {{\rm d}V^{\textit{REV}}}{{\rm d}N_i^{\textit{REV}}} \right )_{T, N_j^{\textit{REV}}} . \end{equation}

The value of

![]() $V_i$

can be computed when the REV composition is varied,

$V_i$

can be computed when the REV composition is varied,

![]() $V^{\textit{REV}}$

being the volume (here, an area since we work in 2-D) of the REV and

$V^{\textit{REV}}$

being the volume (here, an area since we work in 2-D) of the REV and

![]() $N_i$

being the number of moles of phase

$N_i$

being the number of moles of phase

![]() $i$

in the REV. For definitions of other thermodynamic variables of the REV, we refer to Bedeaux & Kjelstrup (Reference Bedeaux and Kjelstrup2021) and Bedeaux et al. (Reference Bedeaux, Kjelstrup and Schnell2024).

$i$

in the REV. For definitions of other thermodynamic variables of the REV, we refer to Bedeaux & Kjelstrup (Reference Bedeaux and Kjelstrup2021) and Bedeaux et al. (Reference Bedeaux, Kjelstrup and Schnell2024).

The resulting fluxes in (3.26) and (3.27) can then be written as

Only independent, extensive variables can be used. An isothermal system of two components has the entropy production

![]() $\sigma _s$

. The entropy production is invariant, i.e. it does not depend on the choice of variable set. When the assumptions

$\sigma _s$

. The entropy production is invariant, i.e. it does not depend on the choice of variable set. When the assumptions

![]() $\boldsymbol{\nabla} \kern-1pt p_w = \boldsymbol{\nabla} \kern-1pt p_n = \boldsymbol{\nabla} \kern-1pt p$

hold, we obtain

$\boldsymbol{\nabla} \kern-1pt p_w = \boldsymbol{\nabla} \kern-1pt p_n = \boldsymbol{\nabla} \kern-1pt p$

hold, we obtain

where the volume flux is given by

The single flux–force relation becomes

and the coefficient

![]() $L_{\textit{VV}}$

is given by

$L_{\textit{VV}}$

is given by

The expression for the coefficient

![]() $L_{\textit{VV}}$

includes contributions from the four coefficients

$L_{\textit{VV}}$

includes contributions from the four coefficients

![]() $L_{ij}$

and corresponding partial molar volumes

$L_{ij}$

and corresponding partial molar volumes

![]() $V_i$

. Following Alfazazi et al. (Reference Alfazazi, Bedeaux, Kjelstrup, Moura, Ebadi, Mostaghimi, McClure and Armstrong2024), we have that using (3.23), (3.33) and (3.34), a link between the total mobility and the coefficient

$V_i$

. Following Alfazazi et al. (Reference Alfazazi, Bedeaux, Kjelstrup, Moura, Ebadi, Mostaghimi, McClure and Armstrong2024), we have that using (3.23), (3.33) and (3.34), a link between the total mobility and the coefficient

![]() $L_{\textit{VV}}$

is found, i.e.

$L_{\textit{VV}}$

is found, i.e.

Alfazazi et al. (Reference Alfazazi, Bedeaux, Kjelstrup, Moura, Ebadi, Mostaghimi, McClure and Armstrong2024) employed this expression to test the predictability potential of the fluctuation–dissipation approach to estimate the total mobility in a series of experiments in which the pressure drop across the sample was systematically varied.

In the steady-state experiments considered here, the saturation is expected to be homogeneous across the sample (apart from regions close to the inlet and outlet boundaries where capillary end effects might occur). The capillary pressure

![]() $p_c = p_n - p_w$

is also expected to be spatially constant, which means that the assumption

$p_c = p_n - p_w$

is also expected to be spatially constant, which means that the assumption

![]() $\boldsymbol{\nabla} \kern-1pt p_w = \boldsymbol{\nabla} \kern-1pt p_n = \boldsymbol{\nabla} \kern-1pt p$

should hold. If we consider however a situation in which the pressure gradients are not necessarily the same, i.e.

$\boldsymbol{\nabla} \kern-1pt p_w = \boldsymbol{\nabla} \kern-1pt p_n = \boldsymbol{\nabla} \kern-1pt p$

should hold. If we consider however a situation in which the pressure gradients are not necessarily the same, i.e.

![]() $\boldsymbol{\nabla} \kern-1pt p_w \neq \boldsymbol{\nabla} \kern-1pt p_n$

, then by using (3.30) and (3.31) for

$\boldsymbol{\nabla} \kern-1pt p_w \neq \boldsymbol{\nabla} \kern-1pt p_n$

, then by using (3.30) and (3.31) for

![]() $J_w$

and

$J_w$

and

![]() $J_n$

in (3.23) and (3.24), we have

$J_n$

in (3.23) and (3.24), we have

This is a homogeneous system of equations for the pair

![]() $(\boldsymbol{\nabla} \kern-1pt p_w,\boldsymbol{\nabla} \kern-1pt p_n)$

. For a non-trivial solution to exist, we need the determinant of the matrix of coefficients to be zero. In this simple 2 × 2 system, this can also be seen by isolating

$(\boldsymbol{\nabla} \kern-1pt p_w,\boldsymbol{\nabla} \kern-1pt p_n)$

. For a non-trivial solution to exist, we need the determinant of the matrix of coefficients to be zero. In this simple 2 × 2 system, this can also be seen by isolating

![]() $\boldsymbol{\nabla} \kern-1pt p_n$

from the second equation and substituting in the first one. This leads us to the following link between the two relative permeabilities and the Onsager coefficients:

$\boldsymbol{\nabla} \kern-1pt p_n$

from the second equation and substituting in the first one. This leads us to the following link between the two relative permeabilities and the Onsager coefficients:

\begin{equation} \begin{aligned} \left (\frac {k_{rw}K}{\mu _w} - \frac {V_wL_{ww}V_w}{T}\right ) \left (\frac {k_{rn}K}{\mu _n} - \frac {V_nL_{\textit{nn}}V_n}{T}\right ) \\ = \left ( \frac {V_wL_{wn}V_n}{T} \right ) \left ( \frac {V_wL_{nw}V_n}{T} \right ) . \end{aligned} \end{equation}

\begin{equation} \begin{aligned} \left (\frac {k_{rw}K}{\mu _w} - \frac {V_wL_{ww}V_w}{T}\right ) \left (\frac {k_{rn}K}{\mu _n} - \frac {V_nL_{\textit{nn}}V_n}{T}\right ) \\ = \left ( \frac {V_wL_{wn}V_n}{T} \right ) \left ( \frac {V_wL_{nw}V_n}{T} \right ) . \end{aligned} \end{equation}

Equation (3.39) provides an interesting connection between the multiphase Darcy approach and the approach we have outline here based on non-equilibrium thermodynamics. If one of the relative permeabilities is known and the Onsager matrix is measured, the other relative permeability can be obtained via this equation. Interestingly, this equation seems to predict the correct qualitative dependence between the separate relative permeabilities: if

![]() $k_{rn}$

increases,

$k_{rn}$

increases,

![]() $k_{rw}$

decreases and vice versa. However, the assumption that

$k_{rw}$

decreases and vice versa. However, the assumption that

![]() $\boldsymbol{\nabla} \kern-1pt p_w \neq \boldsymbol{\nabla} \kern-1pt p_n$

naturally leads to a gradient in capillary pressure

$\boldsymbol{\nabla} \kern-1pt p_w \neq \boldsymbol{\nabla} \kern-1pt p_n$

naturally leads to a gradient in capillary pressure

![]() $\boldsymbol{\nabla} \kern-1pt p_c = \boldsymbol{\nabla} \kern-1pt p_n - \boldsymbol{\nabla} \kern-1pt p_w \neq 0$

, a situation that is typically not observed in a steady-state scenario with an homogeneous system in the absence of gravitational effects. This could be the case though in a transient flow regime or in a regime where the porous medium presents a gradient in pore structure or wettability, something which naturally leads to a gradient in saturation and capillary pressure. Gradients in capillary pressure are also expected when other body forces are in place, for instance, gravitational forces (Birovljev et al. Reference Birovljev, Furuberg, Feder, Jøssang, Måløy and Aharony1991; Méheust et al. Reference Méheust, Løvoll, Måløy and Schmittbuhl2002; Løvoll et al. Reference Løvoll, Méheust, Måløy, Aker and Schmittbuhl2005). In the framework described by Bedeaux et al. (Reference Bedeaux, Kjelstrup and Schnell2024), the effective pressure of an REV or representative elementary area (REA) accounts for gradients in saturation and the resulting capillary pressure variations. This effective pressure plays a crucial role in the driving force of transport at the continuum scale, integrating saturation gradients into the macroscopic description of flow.

$\boldsymbol{\nabla} \kern-1pt p_c = \boldsymbol{\nabla} \kern-1pt p_n - \boldsymbol{\nabla} \kern-1pt p_w \neq 0$

, a situation that is typically not observed in a steady-state scenario with an homogeneous system in the absence of gravitational effects. This could be the case though in a transient flow regime or in a regime where the porous medium presents a gradient in pore structure or wettability, something which naturally leads to a gradient in saturation and capillary pressure. Gradients in capillary pressure are also expected when other body forces are in place, for instance, gravitational forces (Birovljev et al. Reference Birovljev, Furuberg, Feder, Jøssang, Måløy and Aharony1991; Méheust et al. Reference Méheust, Løvoll, Måløy and Schmittbuhl2002; Løvoll et al. Reference Løvoll, Méheust, Måløy, Aker and Schmittbuhl2005). In the framework described by Bedeaux et al. (Reference Bedeaux, Kjelstrup and Schnell2024), the effective pressure of an REV or representative elementary area (REA) accounts for gradients in saturation and the resulting capillary pressure variations. This effective pressure plays a crucial role in the driving force of transport at the continuum scale, integrating saturation gradients into the macroscopic description of flow.

The formulation presented here provides a framework for linking non-equilibrium thermodynamics to the multiphase Darcy model. Although some aspects are still not fully understood in the relationship between thermodynamic properties at the REV scale and macroscopic permeability, we believe that this approach offers a promising avenue for bridging these concepts. The interpretation of quantities, such as the chemical potential and its various contributions contained in (3.28), and their role in macroscopic flow models remains an active area of investigation, both in terms of theoretical development and experimental validation.

4. Results and discussions

4.1. Experimental dynamics, phase redistribution and fluctuations in saturation

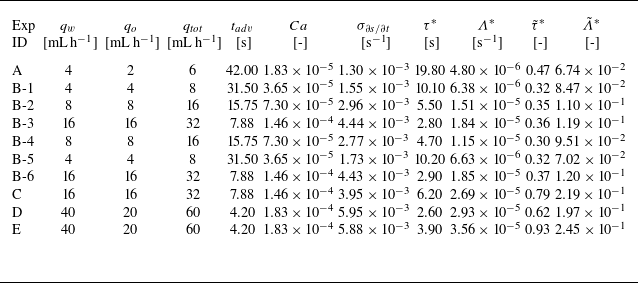

Using the set-up shown in figure 1, we performed a series of experiments in which both water and mineral oil phases were co-injected at the respective injection rates

![]() $q_w$

and

$q_w$

and

![]() $q_o$

. If the injection rate was too low, the experiment would take a very long time to reach a steady-state situation and the fluid transport would be dominated either by connected pathways linking the inlet to outlet or by flow through thin films covering the surface of the grains (Aursjø et al. Reference Aursjø, Erpelding, Tallakstad, Flekkøy, Hansen and Måløy2014). These dynamic states would look frozen to the camera, i.e. we would not see a clear dynamics of moving clusters and there would be no observable temporal fluctuations of saturation, something needed for the computation proposed in (3.18). However, if the injection rate were set to a very high value, we would require a very high acquisition rate from the cameras to capture the temporal dynamics at a rate fast enough to perform the saturation analysis (see, for instance, Avraam & Payatakes Reference Avraam and Payatakes1995; Armstrong et al. Reference Armstrong, McClure, Berrill, Rücker, Schlüter and Berg2016 for considerations on different flow regimes). To accommodate for these constraints, we have focused on experiments for which the total flow rate

$q_o$

. If the injection rate was too low, the experiment would take a very long time to reach a steady-state situation and the fluid transport would be dominated either by connected pathways linking the inlet to outlet or by flow through thin films covering the surface of the grains (Aursjø et al. Reference Aursjø, Erpelding, Tallakstad, Flekkøy, Hansen and Måløy2014). These dynamic states would look frozen to the camera, i.e. we would not see a clear dynamics of moving clusters and there would be no observable temporal fluctuations of saturation, something needed for the computation proposed in (3.18). However, if the injection rate were set to a very high value, we would require a very high acquisition rate from the cameras to capture the temporal dynamics at a rate fast enough to perform the saturation analysis (see, for instance, Avraam & Payatakes Reference Avraam and Payatakes1995; Armstrong et al. Reference Armstrong, McClure, Berrill, Rücker, Schlüter and Berg2016 for considerations on different flow regimes). To accommodate for these constraints, we have focused on experiments for which the total flow rate

![]() $q_{\textit{tot}} = q_w + q_o$

varied between

$q_{\textit{tot}} = q_w + q_o$

varied between

![]() $6\, {\rm mL\,h^{- 1}}$

and

$6\, {\rm mL\,h^{- 1}}$

and

![]() $60\, {\rm mL\,h^{- 1}}$

. We will focus initially on a single experiment for which

$60\, {\rm mL\,h^{- 1}}$

. We will focus initially on a single experiment for which

![]() $q_w = q_o = 16\, {\rm mL\,h^{- 1}}$

and postpone the analysis of multiple experiments to § 4.6. The capillary number can be estimated as

$q_w = q_o = 16\, {\rm mL\,h^{- 1}}$

and postpone the analysis of multiple experiments to § 4.6. The capillary number can be estimated as

![]() $Ca = \eta _o q_o/(A^x\gamma )$

, where

$Ca = \eta _o q_o/(A^x\gamma )$

, where

![]() $\gamma$

is the oil–water interfacial tension and

$\gamma$

is the oil–water interfacial tension and

![]() $A^x = 35\, \text{mm}^2$

is the cross-sectional area of the model. We have not measured

$A^x = 35\, \text{mm}^2$

is the cross-sectional area of the model. We have not measured

![]() $\gamma$

for the particular fluid pair in the system, but using a typical value of

$\gamma$

for the particular fluid pair in the system, but using a typical value of

![]() $\gamma \, \approx 20\, {\rm mN\,m^{- 1}}$

would give

$\gamma \, \approx 20\, {\rm mN\,m^{- 1}}$

would give

![]() $Ca \approx 1.5\times 10^{-4}$

. The flow is thus in a domain where viscous forces are expected to be rather significant. The typical advective time can be computed as

$Ca \approx 1.5\times 10^{-4}$

. The flow is thus in a domain where viscous forces are expected to be rather significant. The typical advective time can be computed as

![]() $t_{\textit{ad}v} = a A^x/q_{\textit{tot}} = 7.9\, \text{s}$

. We consider the cylinder diameter

$t_{\textit{ad}v} = a A^x/q_{\textit{tot}} = 7.9\, \text{s}$

. We consider the cylinder diameter

![]() $a = 2\, \text{mm}$

as the typical length scale for the problem, being also an approximate value for the typical pore size.

$a = 2\, \text{mm}$

as the typical length scale for the problem, being also an approximate value for the typical pore size.

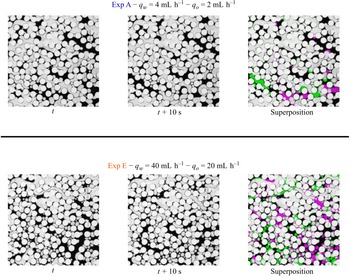

Figure 2. Fluctuation in phase saturation in the porous medium. The diameter of the cylinders is

![]() $2$

mm. (a) Two snapshots of the experiment separated by

$2$

mm. (a) Two snapshots of the experiment separated by

![]() $10$

s. On average, the flow is from left to right. (b) Composition of the two snapshots highlighting the regions where fluid reconfiguration has occurred. In green, we see points that were oil-filled and became water-filled and in magenta points that were water-filled that became oil-filled.

$10$

s. On average, the flow is from left to right. (b) Composition of the two snapshots highlighting the regions where fluid reconfiguration has occurred. In green, we see points that were oil-filled and became water-filled and in magenta points that were water-filled that became oil-filled.

The experiment is initiated with the model completely saturated by the water phase. The two water and oil pumps are then simultaneously turned on and the dynamics begins. During the experiment, an intense succession of events occur, with fluid clusters getting trapped and mobilised, merging and breaking apart. This dynamics causes the local saturation in any portion of the medium to fluctuate in time, and this fluctuation is the basic quantity we measure. In figure 2, we see the typical fluid reconfigurations that lead to fluctuations in saturation (images from the machine vision camera focused on the red square part seen in figure 1

b). Figure 2(a) shows two snapshots of the experiment separated by

![]() $10$

s (100 frames). The average flow direction is from left to right. Figure 2(b) shows a composition of the two snapshots where we highlight the portions where fluid reconfiguration has occurred. In green, we see points that were oil-filled and became water-filled, and in magenta, points that were water-filled that became oil-filled. Notice that since the boundaries of the image are all open to the flow, nothing prevents us from having more green or more magenta in the composition image, i.e. the total saturation of each fluid phase can vary. If the saturation of phase

$10$

s (100 frames). The average flow direction is from left to right. Figure 2(b) shows a composition of the two snapshots where we highlight the portions where fluid reconfiguration has occurred. In green, we see points that were oil-filled and became water-filled, and in magenta, points that were water-filled that became oil-filled. Notice that since the boundaries of the image are all open to the flow, nothing prevents us from having more green or more magenta in the composition image, i.e. the total saturation of each fluid phase can vary. If the saturation of phase

![]() $i$

in the region we consider increases by a given amount

$i$

in the region we consider increases by a given amount

![]() $\delta S_i$

, the saturation of the other phase must decrease by the same amount (as

$\delta S_i$

, the saturation of the other phase must decrease by the same amount (as

![]() $S_{w}^{\textit{REV}}+S_{n}^{\textit{REV}}=1$

); however, it is important to remember that the individual values of

$S_{w}^{\textit{REV}}+S_{n}^{\textit{REV}}=1$

); however, it is important to remember that the individual values of

![]() $\delta S_i$

do not need to be zero. In a steady-state situation, we expect the time average of the fluctuations in phase saturation to vanish, i.e.

$\delta S_i$

do not need to be zero. In a steady-state situation, we expect the time average of the fluctuations in phase saturation to vanish, i.e.

![]() $\langle \delta S_i\rangle = 0$

, and the saturation fluctuates around a well defined mean value

$\langle \delta S_i\rangle = 0$

, and the saturation fluctuates around a well defined mean value

![]() $\left \langle {S_i}\right \rangle$

; however, the specific value of

$\left \langle {S_i}\right \rangle$

; however, the specific value of

![]() $\delta S_i$

at a given time does not need to vanish.

$\delta S_i$

at a given time does not need to vanish.

Figure 3. Water phase saturation over time. The main plot shows the full time series of water phase saturation in the analysed region, fluctuating around a mean of

![]() $\langle {S_w}\rangle = 0.48$

. At the 500 s scale, fluctuations appear uncorrelated. The top-left inset (light red) zooms in on fluctuations at the 100 s scale, which also seem uncorrelated. The top-right inset (light green) further magnifies to 10 s, where fluctuations are expected to be highly correlated.

$\langle {S_w}\rangle = 0.48$

. At the 500 s scale, fluctuations appear uncorrelated. The top-left inset (light red) zooms in on fluctuations at the 100 s scale, which also seem uncorrelated. The top-right inset (light green) further magnifies to 10 s, where fluctuations are expected to be highly correlated.

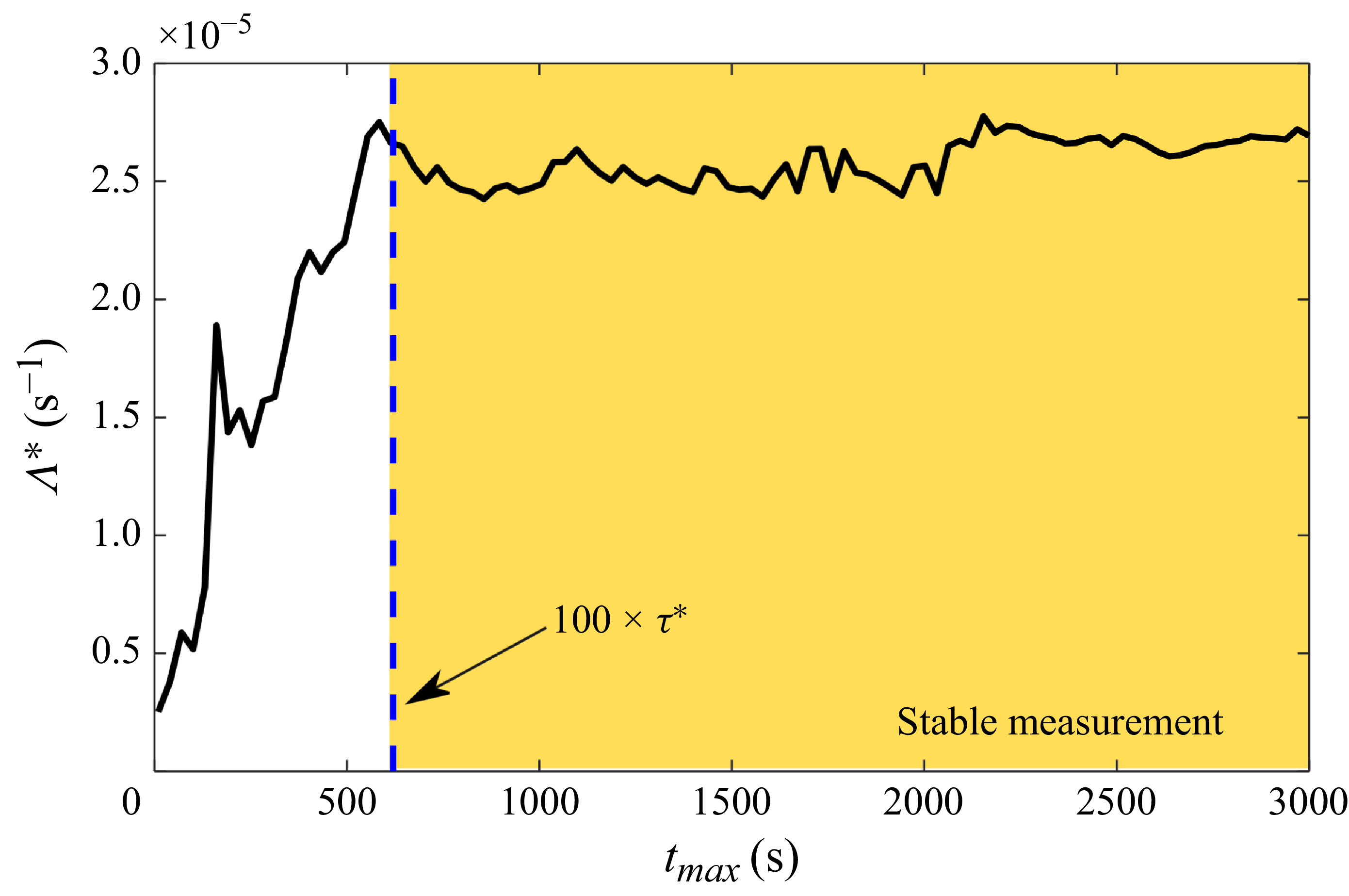

Figure 3 shows a plot of the water saturation in the REV as a function of time for an interval of 3000 s (50 min). In the very beginning of the experiment (not seen in the plot), the model was fully saturated with water (thus having water saturation equal to 1). In the analysis shown here, we waited for a period of approximately 24 min since the beginning, for the saturation to decrease to the steady-state value we see in the plot, in which it fluctuates around the mean value

![]() $\left \langle {S_w}\right \rangle = 0.48$

. We start counting the time at this point, when the system is already in a steady state. In addition to the main plot (with a scale bar showing 500 s), we show two insets. On the top left (light red), we show the typical fluctuations at a scale of 100 s and on the top right (light green), we show the fluctuations at a scale of 10 s. Just from the visual inspection of the plot, we can expect the fluctuations to be uncorrelated on time scales larger than 100 s, but within the 10 s time scale, the fluctuations seem to be strongly correlated. What we mean by that is that if we know that at a given instant the saturation is, say, increasing, we can say that there is a high likelihood that it will also be increasing within the next few seconds, but we cannot say anything about it 100 s into the future.

$\left \langle {S_w}\right \rangle = 0.48$

. We start counting the time at this point, when the system is already in a steady state. In addition to the main plot (with a scale bar showing 500 s), we show two insets. On the top left (light red), we show the typical fluctuations at a scale of 100 s and on the top right (light green), we show the fluctuations at a scale of 10 s. Just from the visual inspection of the plot, we can expect the fluctuations to be uncorrelated on time scales larger than 100 s, but within the 10 s time scale, the fluctuations seem to be strongly correlated. What we mean by that is that if we know that at a given instant the saturation is, say, increasing, we can say that there is a high likelihood that it will also be increasing within the next few seconds, but we cannot say anything about it 100 s into the future.

Our next step in the study of the fluctuation correlations is to consider the derivative of the saturation. Taking a numerical derivative of a strongly fluctuating experimental quantity (as seen in figure 3) can be challenging, particularly for very short time scales in which high-frequency fluctuations (possibly coming from other sources, like fluctuations in illumination or electronic noise) can lead to overestimating the result. To account for that, we have considered a time difference

![]() $\delta t$

for the finite difference used to compute the derivative that is in between the minimum time scale we can resolve of

$\delta t$

for the finite difference used to compute the derivative that is in between the minimum time scale we can resolve of

![]() $0.1$

s (corresponding to the time difference between two frames in the images) and the typical time scale we expect the data to start presenting decorrelation in the signal (which we conservatively estimate as

$0.1$

s (corresponding to the time difference between two frames in the images) and the typical time scale we expect the data to start presenting decorrelation in the signal (which we conservatively estimate as

![]() $10\, \text{s}$

from figure 3). Attending to the condition

$10\, \text{s}$

from figure 3). Attending to the condition

![]() $0.1\, \text{s} \ll \delta t \ll 10\, \text{s}$

, we chose

$0.1\, \text{s} \ll \delta t \ll 10\, \text{s}$

, we chose

![]() $\delta t = 1\, \text{s}$

. Figure 4 shows the resulting plot of the derivative of the water saturation. As expected, this quantity fluctuates around zero, a necessary condition for the application of (3.17). The fluctuations around zero for the saturation derivative are expected from the fact that the experiment is in a statistical steady-state as previously explained. On the inset (light yellow), we see a zoomed-in section indicating the expected fluctuations in the derivative of saturation for a typical time scale of 10 s. We see now more clearly that indeed the decorrelation time seems to be smaller than 10 s. Under these conditions, the system is in a state which can be characterised by microscopic reversibility, a requirement for the use of non-equilibrium thermodynamics.

$\delta t = 1\, \text{s}$

. Figure 4 shows the resulting plot of the derivative of the water saturation. As expected, this quantity fluctuates around zero, a necessary condition for the application of (3.17). The fluctuations around zero for the saturation derivative are expected from the fact that the experiment is in a statistical steady-state as previously explained. On the inset (light yellow), we see a zoomed-in section indicating the expected fluctuations in the derivative of saturation for a typical time scale of 10 s. We see now more clearly that indeed the decorrelation time seems to be smaller than 10 s. Under these conditions, the system is in a state which can be characterised by microscopic reversibility, a requirement for the use of non-equilibrium thermodynamics.

Figure 4. Derivative of water saturation over time. As expected, saturation fluctuations average to zero, a necessary condition for steady state. The inset (light yellow) magnifies a 50 s segment, showing that the signal appears uncorrelated for times longer than 10 s. The vertical band in the main plot marks a sliding window of

![]() $\Delta t^{\textit{sub}} = 100\,\text{s}$

, relevant for the analysis in § 4.4.

$\Delta t^{\textit{sub}} = 100\,\text{s}$

, relevant for the analysis in § 4.4.

We further quantify the fluctuations by looking at their statistics. In figure 5, we show the probability density function (p.d.f.) of the fluctuations

![]() $P(x={\rm d}S/{\rm d}t)$

obtained by computing a histogram of the data in figure 4. The solid line shows a Gaussian fit. The grey shades denote

$P(x={\rm d}S/{\rm d}t)$

obtained by computing a histogram of the data in figure 4. The solid line shows a Gaussian fit. The grey shades denote

![]() $\pm 2 \sigma$

(darker) and

$\pm 2 \sigma$

(darker) and

![]() $\pm 3 \sigma$

(lighter) windows, where

$\pm 3 \sigma$

(lighter) windows, where

![]() $\sigma$

is the standard deviation of the original data. Notice that the curve is essentially centred at

$\sigma$

is the standard deviation of the original data. Notice that the curve is essentially centred at

![]() ${\rm d}S/{\rm d}t = 0$

, another indication that the system is in a steady state and the fluctuations have zero temporal average (we did not subtract the mean value of the fluctuations prior to producing the histogram in figure 5, the zero mean is a natural consequence of the steady-state dynamics). In figure 5(a), we show the data on a linear scale and the Gaussian fit seems to describe the data reasonably well. However, when plotting the probability as a function of the reduced variable sign

${\rm d}S/{\rm d}t = 0$

, another indication that the system is in a steady state and the fluctuations have zero temporal average (we did not subtract the mean value of the fluctuations prior to producing the histogram in figure 5, the zero mean is a natural consequence of the steady-state dynamics). In figure 5(a), we show the data on a linear scale and the Gaussian fit seems to describe the data reasonably well. However, when plotting the probability as a function of the reduced variable sign

![]() $({\rm d}S/{\rm d}t)\boldsymbol{\cdot }({\rm d}S/{\rm d}T)^2$

, see figure 5(b), we notice that the rare events of large saturation changes (with

$({\rm d}S/{\rm d}t)\boldsymbol{\cdot }({\rm d}S/{\rm d}T)^2$

, see figure 5(b), we notice that the rare events of large saturation changes (with

![]() $|{\rm d}S/{\rm d}t|\gt 0.01$

) do not seem to obey the Gaussian statistics (non-Gaussian statistics was also observed by Alfazazi et al. Reference Alfazazi, Bedeaux, Kjelstrup, Moura, Ebadi, Mostaghimi, McClure and Armstrong2024). Although these events are relatively unfrequent (they are approximately 2 standard deviations away from the mean, therefore accounting for less than

$|{\rm d}S/{\rm d}t|\gt 0.01$

) do not seem to obey the Gaussian statistics (non-Gaussian statistics was also observed by Alfazazi et al. Reference Alfazazi, Bedeaux, Kjelstrup, Moura, Ebadi, Mostaghimi, McClure and Armstrong2024). Although these events are relatively unfrequent (they are approximately 2 standard deviations away from the mean, therefore accounting for less than

![]() $5\,\%$

of the total count of events), we believe they could be very significant for the process as those should correspond to the large cluster rearrangements leading to fast changes in saturation. We found however that this result (the apparent deviation from Gaussian statistics) could be sensitive to the interval

$5\,\%$

of the total count of events), we believe they could be very significant for the process as those should correspond to the large cluster rearrangements leading to fast changes in saturation. We found however that this result (the apparent deviation from Gaussian statistics) could be sensitive to the interval

![]() $\delta t$

used in the computation of the derivatives. If

$\delta t$

used in the computation of the derivatives. If

![]() $\delta t$

is choosen too large, the large derivatives are not accurately measured, which removes the tails of the distribution. However, if

$\delta t$

is choosen too large, the large derivatives are not accurately measured, which removes the tails of the distribution. However, if

![]() $\delta t$

is too small, one may inadvertently introduce artefacts from high-frequency fluctuations that may be unrelated to the process in study (for instance, errors coming from fluctuations in light intensity). In the supplementary material available at https://doi.org/10.1017/jfm.2025.10987, we show a sensitivity analysis of our results with regard to the binning procedure (number of bins for the histogram) and the time derivative lag

$\delta t$

is too small, one may inadvertently introduce artefacts from high-frequency fluctuations that may be unrelated to the process in study (for instance, errors coming from fluctuations in light intensity). In the supplementary material available at https://doi.org/10.1017/jfm.2025.10987, we show a sensitivity analysis of our results with regard to the binning procedure (number of bins for the histogram) and the time derivative lag

![]() $\delta t$

. We return to the topic of fluctuation statistics in § 4.6, where we perform the analysis of a series of experiments.

$\delta t$