1 Introduction

Let A be an $n\times n$ random symmetric matrix whose entries on and above the diagonal $(A_{i,j})_{i\leqslant j}$ are independent and identically distributed (i.i.d.) with mean $0$ and variance $1$. This matrix model, sometimes called the Wigner matrix ensemble, was introduced in the 1950s in the seminal work of Wigner [Reference Wigner50], who established the famous “semicircular law” for the eigenvalues of such matrices.

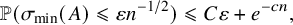

In this paper, we study the extreme behavior of the least singular value of A, which we denote by $\sigma _{\min }(A)$. Heuristically, we expect that $\sigma _{\min }(A) = \Theta (n^{-1/2})$, and thus it is natural to consider

$$ \begin{align} \mathbb{P}\big(\sigma_{\min}(A) \leqslant \varepsilon n^{-1/2}\big) \tag{1.1} \end{align} $$

for all $\varepsilon \geqslant 0$ (see Section 1.2). In this paper, we prove a bound on this quantity which is optimal up to constants, for all random symmetric matrices with i.i.d. subgaussian entries. This confirms a folklore conjecture, explicitly stated by Vershynin in [Reference Vershynin46].
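The quantity (1.1) can be explored numerically for small n. The Monte Carlo sketch below is not taken from the paper and only illustrates the definitions: it assumes Rademacher entries (uniform on $\{-1,1\}$, one admissible choice of $\zeta$), computes eigenvalues with a plain cyclic Jacobi iteration, and uses the fact that for a real symmetric matrix $\sigma_{\min}(A) = \min_i |\lambda_i(A)|$. All function names are hypothetical.

```python
import math
import random


def jacobi_eigenvalues(a, tol=1e-18, max_sweeps=100):
    """Eigenvalues (ascending) of a real symmetric matrix by cyclic Jacobi rotations."""
    n = len(a)
    a = [list(row) for row in a]  # work on a copy
    for _ in range(max_sweeps):
        off = sum(a[i][j] ** 2 for i in range(n) for j in range(n) if i != j)
        if off <= tol:
            break
        for p in range(n - 1):
            for q in range(p + 1, n):
                if a[p][q] == 0.0:
                    continue
                # Rotation angle chosen so that the rotated a[p][q] is zero.
                theta = (a[q][q] - a[p][p]) / (2.0 * a[p][q])
                t = math.copysign(1.0, theta) / (abs(theta) + math.sqrt(theta * theta + 1.0))
                c = 1.0 / math.sqrt(t * t + 1.0)
                s = t * c
                for k in range(n):  # A <- A P: update columns p and q
                    akp, akq = a[k][p], a[k][q]
                    a[k][p] = c * akp - s * akq
                    a[k][q] = s * akp + c * akq
                for k in range(n):  # A <- P^T A: update rows p and q
                    apk, aqk = a[p][k], a[q][k]
                    a[p][k] = c * apk - s * aqk
                    a[q][k] = s * apk + c * aqk
    return sorted(a[i][i] for i in range(n))


def sigma_min(a):
    """Least singular value of a symmetric matrix = smallest |eigenvalue|."""
    return min(abs(lam) for lam in jacobi_eigenvalues(a))


def rademacher_symmetric(n, rng):
    """Symmetric n x n matrix with i.i.d. uniform {-1, 1} entries on and above the diagonal."""
    a = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i, n):
            a[i][j] = a[j][i] = rng.choice((-1.0, 1.0))
    return a


def sample_sigma_min(n, trials, seed=0):
    """Draw `trials` matrices and record sigma_min of each."""
    rng = random.Random(seed)
    return [sigma_min(rademacher_symmetric(n, rng)) for _ in range(trials)]


def empirical_lower_tail(samples, n, eps):
    """Fraction of samples with sigma_min <= eps * n^{-1/2}, an estimate of (1.1).

    Singular matrices give floating-point eigenvalues of size ~1e-15 rather
    than exactly 0, so the eps = 0 case needs exact arithmetic instead.
    """
    threshold = eps / math.sqrt(n)
    return sum(s <= threshold for s in samples) / len(samples)


if __name__ == "__main__":
    samples = sample_sigma_min(n=8, trials=200)
    for eps in (0.05, 0.1, 0.2, 0.4):
        print(eps, empirical_lower_tail(samples, 8, eps))
```

Because the values of $\sigma_{\min}$ are sampled once and then thresholded, the estimates are automatically monotone in $\varepsilon$. For very small n, exact singularity of $\pm 1$ matrices is common (for n = 2 it has probability 1/2), and such matrices are counted for every $\varepsilon > 0$; this is the kind of $\varepsilon$-independent contribution that the $e^{-cn}$ term in Theorem 1.1 accounts for.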

Theorem 1.1. Let $\zeta $ be a subgaussian random variable with mean $0$ and variance $1$, and let A be an $n \times n$ random symmetric matrix whose entries on and above the diagonal $(A_{i,j})_{i\leqslant j}$ are independent and distributed according to $\zeta $. Then for every $\varepsilon \geqslant 0$,

$$\begin{align*} \mathbb{P}\big(\sigma_{\min}(A) \leqslant \varepsilon n^{-1/2}\big) \leqslant C\varepsilon + e^{-cn}, \end{align*}$$

where $C,c>0$ depend only on $\zeta $.

This bound is sharp up to the value of the constants $C,c>0$ and resolves the “up-to-constants” analogue of the Spielman–Teng [Reference Spielman and Teng38] conjecture for random symmetric matrices (see Section 1.2). Also note that the special case $\varepsilon = 0 $ tells us that the singularity probability of any random symmetric A with subgaussian entry distribution is exponentially small, generalizing our previous work [Reference Campos, Jenssen, Michelen and Sahasrabudhe4] on the $\{-1,1\}$ case.
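For $\pm 1$ entries and very small n, the special case $\varepsilon = 0$ (the singularity probability) can be computed exactly by enumerating all $2^{n(n+1)/2}$ sign patterns on and above the diagonal. This brute-force sketch uses hypothetical helper names and exact integer determinants (fraction-free Bareiss elimination), so there is no floating-point ambiguity about whether $\det A = 0$; it is feasible only up to about n = 5.

```python
from fractions import Fraction
from itertools import product


def det_int(m):
    """Exact determinant of an integer matrix via fraction-free Bareiss elimination."""
    a = [list(row) for row in m]
    n = len(a)
    sign, prev = 1, 1
    for k in range(n - 1):
        if a[k][k] == 0:
            # Look for a nonzero pivot below row k and swap it up.
            for r in range(k + 1, n):
                if a[r][k] != 0:
                    a[k], a[r] = a[r], a[k]
                    sign = -sign
                    break
            else:
                return 0  # the whole column is zero
        for i in range(k + 1, n):
            for j in range(k + 1, n):
                # Bareiss update; the division by prev is always exact.
                a[i][j] = (a[i][j] * a[k][k] - a[i][k] * a[k][j]) // prev
        prev = a[k][k]
    return sign * a[n - 1][n - 1]


def singularity_probability(n):
    """Exact P(det A = 0) for symmetric A with i.i.d. uniform {-1, 1} entries on/above the diagonal."""
    positions = [(i, j) for i in range(n) for j in range(i, n)]
    singular = 0
    for signs in product((-1, 1), repeat=len(positions)):
        a = [[0] * n for _ in range(n)]
        for (i, j), s in zip(positions, signs):
            a[i][j] = a[j][i] = s
        if det_int(a) == 0:
            singular += 1
    return Fraction(singular, 2 ** len(positions))


if __name__ == "__main__":
    for n in range(1, 5):
        print(n, singularity_probability(n))
```

Exact enumeration is hopeless beyond tiny n, which is why a bound of the form $e^{-cn}$, valid for all n and all subgaussian $\zeta$, requires the structural arguments of the paper.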

1.1 Repeated eigenvalues

Before we discuss the history of the least singular value problem, we highlight one further contribution of this paper: a proof that a random symmetric matrix has no repeated eigenvalues with probability $1-e^{-\Omega (n)}$.

In the 1980s, Babai [Reference Tao and Vu43] conjectured that the adjacency matrix of the binomial random graph $G(n,1/2)$ has no repeated eigenvalues with probability $1-o(1)$ (see [Reference Tao and Vu43]). Tao and Vu [Reference Tao and Vu43] proved this conjecture in 2014 and, in subsequent work on the topic with Nguyen [Reference Nguyen, Tao and Vu24], went on to conjecture that the probability that a random symmetric matrix with i.i.d. subgaussian entries has no repeated eigenvalues is $1-e^{-\Omega (n)}$. In this paper, we prove this conjecture en route to proving Theorem 1.1, our main theorem.

Theorem 1.2. Let $\zeta $ be a subgaussian random variable with mean $0$ and variance $1$, and let A be an $n \times n$ random symmetric matrix, where $(A_{i,j})_{i\leqslant j}$ are independent and distributed according to $\zeta $. Then A has no repeated eigenvalues with probability at least $1-e^{-cn}$, where $c>0$ is a constant depending only on $\zeta $.

Theorem 1.2 is easily seen to be sharp whenever $A_{i,j}$ is discrete: consider the event that three rows of A are identical; this event has probability $e^{-\Theta (n)}$ and results in at least two $0$ eigenvalues. Also note that the constant $c$ in Theorem 1.2 may need to be arbitrarily small: consider the entry distribution $\zeta $ which takes the value $0$ with probability $1-p$ and each of the values $\{-p^{-1/2},p^{-1/2}\}$ with probability $p/2$. Here, the probability that $0$ is a repeated eigenvalue is $\geqslant e^{-(3+o(1))pn}$, since this occurs whenever three rows of A are identically zero.
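The lower bound $e^{-(3+o(1))pn}$ in the sparse example can be checked by direct counting: forcing three fixed rows of a symmetric matrix to vanish fixes $3n - 3$ distinct entries on or above the diagonal, since each of the three pairs among the chosen indices is shared by two of the rows. The sketch below (hypothetical helper names) verifies the count on small cases and evaluates $-\log \mathbb{P}/(pn)$ for $\mathbb{P} = (1-p)^{3n-3}$, working with logarithms to avoid underflow.

```python
import math


def entries_in_rows(n, rows):
    """Positions (i, j) with i <= j of a symmetric n x n matrix that lie in the given rows."""
    return {(min(r, k), max(r, k)) for r in rows for k in range(n)}


def zero_rows_entry_count(n, r):
    """Closed form for r distinct rows: r*n positions, minus the r(r-1)/2 pairs shared by two rows."""
    return r * n - r * (r - 1) // 2


def log_prob_three_zero_rows(n, p):
    """log P(three fixed rows vanish) when each entry is 0 with probability 1 - p, independently."""
    return zero_rows_entry_count(n, 3) * math.log1p(-p)


def normalized_exponent(n, p):
    """-log P / (p n); the example in the text corresponds to this being 3 + o(1)."""
    return -log_prob_three_zero_rows(n, p) / (p * n)


if __name__ == "__main__":
    for p in (0.1, 0.01, 0.001):
        print(p, normalized_exponent(10**6, p))
```

The printed values approach 3 as p decreases, which is why the exponent $cn$ in Theorem 1.2 must be allowed to scale like $pn$ for such sparse distributions.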

We in fact prove a more refined version of Theorem 1.2, which gives an upper bound on the probability that two eigenvalues of A fall into an interval of length $\varepsilon $. This is the main result of Section 7. For this, we let $\lambda _1(A)\geqslant \ldots \geqslant \lambda _n(A)$ denote the eigenvalues of the $n\times n$ real symmetric matrix A.

Theorem 1.3. Let $\zeta $ be a subgaussian random variable with mean $0$ and variance $1$, and let A be an $n \times n$ random symmetric matrix, where $(A_{i,j})_{i\leqslant j}$ are independent and distributed according to $\zeta $. Then for each $\ell < cn$ and all $\varepsilon \geqslant 0$, we have

where $C,c>0$ are constants, depending only on $\zeta $.

In the following subsection, we describe the history of the least singular value problem. In Section 1.3, we discuss a technical theme which is developed in this paper, and then, in Section 2, we go on to sketch the proof of Theorem 1.1.

1.2 History of the least singular value problem

The behavior of the least singular value was first studied for random matrices $B_n$ with i.i.d. coefficients, rather than for symmetric random matrices. For this model, the history goes back to von Neumann [Reference Von Neumann48], who suggested that one typically has

$$\begin{align*} \sigma_{\min}(B_n) \approx n^{-1/2} \end{align*}$$

while studying approximate solutions to linear systems. This was then more rigorously conjectured by Smale [Reference Smale36] and proved by Szarek [Reference Szarek39] and Edelman [Reference Edelman8] in the case that $B_n = G_n$ is a random matrix with i.i.d. standard gaussian entries. Edelman found an exact expression for the density of the least singular value in this case. By analyzing this expression, one can deduce that

$$\begin{align} \mathbb{P}\big(\sigma_{\min}(G_n) \leqslant \varepsilon n^{-1/2}\big) \leqslant \varepsilon \tag{1.3} \end{align}$$

for all $\varepsilon \geqslant 0$ (see, e.g., [Reference Spielman and Teng38]). While this gives a very satisfying understanding of the gaussian case, one encounters serious difficulties when trying to extend this result to other distributions. Indeed, Edelman’s proof relies crucially on an exact description of the joint distribution of eigenvalues that is available in the gaussian setting. Over roughly the last 20 years, the least singular value of i.i.d. random matrices has been studied intensively, with the overall goal of proving an appropriate version of (1.3) for different entry distributions and models of random matrices.

An important and challenging feature of the more general problem arises in the case of discrete distributions, where the matrix $B_n$ can become singular with nonzero probability. This singularity event affects the quantity (1.1) for very small $\varepsilon $, and thus estimating the probability that $\sigma _{\min }(B_n) = 0$ is a crucial aspect of generalizing (1.3). This is reflected in the famous and influential Spielman–Teng conjecture [Reference Spielman and Teng37], which proposes the bound

$$\begin{align*} \mathbb{P}\big(\sigma_{\min}(B_n) \leqslant \varepsilon n^{-1/2}\big) \leqslant \varepsilon + e^{-cn}, \end{align*}$$

where $B_n$ is a Bernoulli random matrix. Here, this added exponential term “comes from” the singularity probability of $B_n$. In this direction, a key breakthrough was made by Rudelson [Reference Rudelson30], who proved that if $B_n$ has i.i.d. subgaussian entries, then

This result was extended in a series of works [Reference Rudelson and Vershynin32, Reference Tao and Vu40, Reference Tao and Vu44, Reference Vu and Tao49], culminating in the influential work of Rudelson and Vershynin [Reference Rudelson and Vershynin31], who showed the “up-to-constants” version of Spielman–Teng:

$$\begin{align} \mathbb{P}\big(\sigma_{\min}(B_n) \leqslant \varepsilon n^{-1/2}\big) \leqslant C\varepsilon + e^{-cn}, \tag{1.5} \end{align}$$

where $B_n$ is a matrix with i.i.d. entries that follow any subgaussian distribution $\zeta $ and $C,c>0$ depend only on $\zeta $. A key ingredient in the proof of (1.5) is a novel approach to the “inverse Littlewood-Offord problem,” a perspective pioneered by Tao and Vu [Reference Tao and Vu44] (see Section 1.3 for more discussion).

Another very different approach was taken by Tao and Vu [Reference Tao and Vu41], who showed that the distribution of the least singular value of $B_n$ agrees with that of the least singular value of the gaussian matrix $G_n$, up to an error of order $n^{-c}$. In particular, they prove that

thus resolving the Spielman–Teng conjecture for $\varepsilon \geqslant n^{-c_0}$, in a rather strong form. While falling just short of the Spielman–Teng conjecture, the work of Tao and Vu [Reference Tao and Vu41], Rudelson and Vershynin [Reference Rudelson and Vershynin31], and subsequent refinements by Tikhomirov [Reference Tikhomirov45] and Livshyts et al. [Reference Livshyts, Tikhomirov and Vershynin22] (see also [Reference Livshyts21, Reference Rebrova and Tikhomirov29]) leave us with a very strong understanding of the least singular value for i.i.d. matrix models. However, progress on the analogous problem for random symmetric matrices, or Wigner random matrices, has come more slowly and more recently: in the symmetric case, even proving that $A_n$ is nonsingular with probability $1-o(1)$ was not resolved until the important 2006 paper of Costello et al. [Reference Costello, Tao and Vu7].

Progress on the symmetric version of Spielman–Teng continued with Nguyen [Reference Nguyen25, Reference Nguyen26] and, independently, Vershynin [Reference Vershynin46]. Nguyen proved that for any $B>0$, there exists an $A>0$ for which (Footnote 1)

$$\begin{align*} \mathbb{P}\big(\sigma_{\min}(A_n) \leqslant n^{-A}\big) \leqslant n^{-B}. \end{align*}$$

Vershynin [Reference Vershynin46] proved that if $A_n$ is a matrix with subgaussian entries then, for all $\varepsilon>0$, we have

for all $\eta>0$, where the constants $C_\eta ,c> 0$ may depend on the underlying subgaussian random variable. He went on to conjecture that $\varepsilon $ should replace $\varepsilon ^{1/8 - \eta }$ as the correct order of magnitude, and that $e^{-cn}$ should replace $e^{-n^{c}}$.

After Vershynin, a series of works [Reference Campos, Jenssen, Michelen and Sahasrabudhe3, Reference Campos, Mattos, Morris and Morrison5, Reference Ferber and Jain16, Reference Ferber, Jain, Luh and Samotij17, Reference Jain, Sah and Sawhney19] made progress on the singularity probability (i.e., the $\varepsilon = 0$ case of Vershynin’s conjecture), and we, in [Reference Campos, Jenssen, Michelen and Sahasrabudhe4], ultimately showed that the singularity probability is exponentially small when $A_{i,j}$ is uniform in $\{-1,1\}$:

$$\begin{align*} \mathbb{P}\big(\det(A_n) = 0\big) \leqslant e^{-cn}, \end{align*}$$

which is sharp up to the value of $c>0$.

However, for general $\varepsilon $, the state of the art is due to Jain et al. [Reference Jain, Sah and Sawhney19], who improved on Vershynin’s bound (1.7) by showing

under the subgaussian hypothesis on $A_n$.

For large $\varepsilon $, for example, $\varepsilon \geqslant n^{-c}$, another very different and powerful set of techniques has been developed, which in fact applies more generally to the distribution of other “bulk” eigenvalues and additionally gives distributional information on the eigenvalues. The works of Tao and Vu [Reference Tao and Vu40, Reference Tao and Vu42], Erdős, Schlein and Yau [Reference Erdős, Schlein and Yau10, Reference Erdős, Schlein and Yau11, Reference Erdős, Schlein and Yau13], Erdős et al. [Reference Erdős, Ramírez, Schlein, Tao, Vu and Yau9], and, specifically, Bourgade et al. [Reference Bourgade, Erdős, Yau and Yin2] tell us that

thus obtaining the correct dependence (Footnote 2) on $\varepsilon $ when n is sufficiently large compared to $\varepsilon $. These results are similar in flavor to (1.6) in that they show that the distribution of various eigenvalue statistics is closely approximated by the corresponding statistics in the gaussian case. We note, however, that these techniques appear to be limited to such large $\varepsilon $, and different ideas are required for $\varepsilon < n^{-C}$, and certainly for $\varepsilon $ as small as $e^{-\Theta (n)}$.

Our main theorem, Theorem 1.1, proves Vershynin’s conjecture and thus establishes, up to constants, the optimal dependence on $\varepsilon $ for all $\varepsilon> e^{-cn}$.

1.3 Approximate negative correlation

Before we sketch the proof of Theorem 1.1, we highlight a technical theme of this paper: the approximate negative correlation of certain “linear events.” While this is only one of several new ingredients in this paper, we isolate these ideas here, as they seem to be particularly amenable to wider application. We refer the reader to Section 2 for a more complete overview of the new ideas in this paper.

We say that two events $A,B$ in a probability space are negatively correlated if

$$\begin{align*} \mathbb{P}(A \cap B) \leqslant \mathbb{P}(A)\,\mathbb{P}(B). \end{align*}$$

Here, we state and discuss two approximate negative correlation results: one is from our paper [Reference Campos, Jenssen, Michelen and Sahasrabudhe4] but is used here in an entirely different context, and the other is new.

We start by describing the latter result, which says that a “small ball” event is approximately negatively correlated with a large deviation event. This complements our result from [Reference Campos, Jenssen, Michelen and Sahasrabudhe4], which says that two “small ball events,” of different types, are approximately negatively correlated. In particular, we prove something in the spirit of the following inequality, though in a slightly more technical form:

$$\begin{align} \mathbb{P}_X\big(\{|\langle X, v\rangle| \leqslant \varepsilon\} \cap \{\langle X, u\rangle > t\}\big) \lesssim \mathbb{P}_X\big(|\langle X, v\rangle| \leqslant \varepsilon\big)\,\mathbb{P}_X\big(\langle X, u\rangle > t\big), \tag{1.9} \end{align}$$

where $u, v$ are unit vectors, $t,\varepsilon>0$, and $X = (X_1,\ldots ,X_n)$ is a random vector with i.i.d. subgaussian coordinates with mean $0$ and variance $1$.
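For Rademacher X (uniform on $\{-1,1\}^n$, one subgaussian example) and small n, both sides of (1.9) can be computed exactly by enumerating all $2^n$ sign vectors. The sketch below is only a numerical illustration under that assumption, with hypothetical names; it does not reproduce the more technical statement proved in the paper.

```python
from fractions import Fraction
from itertools import product


def joint_probabilities(v, u, eps, t):
    """Exact P(S), P(L) and P(S and L) for X uniform on {-1,1}^n, where
    S = {|<X,v>| <= eps} (small ball) and L = {<X,u> > t} (large deviation)."""
    n = len(v)
    small = large = both = 0
    for x in product((-1, 1), repeat=n):
        in_small = abs(sum(xi * vi for xi, vi in zip(x, v))) <= eps
        in_large = sum(xi * ui for xi, ui in zip(x, u)) > t
        small += in_small
        large += in_large
        both += in_small and in_large
    total = 2 ** n
    return Fraction(small, total), Fraction(large, total), Fraction(both, total)


if __name__ == "__main__":
    v = [0.5] * 4
    for u, t in (([0.5] * 4, 0.5), ([1.0, 0.0, 0.0, 0.0], 0.0)):
        p_s, p_l, p_sl = joint_probabilities(v, u, eps=0.1, t=t)
        print(p_sl, "vs product", p_s * p_l)
```

With $v = u = \tfrac12(1,1,1,1)$ the two events are disjoint, while with $u = e_1$ the joint probability equals the product exactly. Inequalities such as (1.9) ask that the joint probability never exceed the product by more than a constant factor, under an arithmetic condition on v.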

To state and understand our result, it makes sense to first consider, in isolation, the two events present in (1.9). The easier of the two events is $\langle X, u \rangle>t$, which is a large deviation event for which we may apply the essentially sharp and classical inequality (see Chapter 3.4 in [Reference Vershynin47])

$$\begin{align*} \mathbb{P}_X\big(\langle X, u\rangle > t\big) \leqslant e^{-ct^2}, \end{align*}$$

where $c>0$ is a constant depending only on the distribution of X.
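For the concrete case of Rademacher coordinates, the constant in this bound can be taken to be $c = 1/2$: this is Hoeffding's inequality, which follows from $\cosh(x) \leqslant e^{x^2/2}$. The sketch below (hypothetical names) compares the exact tail, obtained by enumerating $\{-1,1\}^n$, with $e^{-t^2/2}$ for a fixed unit vector u.

```python
import math
from itertools import product


def exact_tail(u, t):
    """P(<X,u> > t) for X uniform on {-1,1}^n, by enumerating all 2^n sign vectors."""
    n = len(u)
    hits = sum(1 for x in product((-1, 1), repeat=n)
               if sum(xi * ui for xi, ui in zip(x, u)) > t)
    return hits / 2 ** n


def hoeffding_bound(t):
    """Hoeffding's bound exp(-t^2 / 2) for a Rademacher sum with a unit coefficient vector."""
    return math.exp(-t * t / 2)


if __name__ == "__main__":
    u = [1 / math.sqrt(10)] * 10
    for t in (0.5, 1.0, 2.0, 3.0):
        print(t, exact_tail(u, t), hoeffding_bound(t))
```

The exact tail stays below the bound for every t; this gaussian-type decay is what makes the large deviation event the easier of the two.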

We now turn to the more complicated small-ball event $|\langle X , v \rangle | \leqslant \varepsilon $ appearing in (1.9). Here, we have a more subtle interaction between v and the distribution of X, and thus we first consider the simplest possible case: when X has i.i.d. standard gaussian entries. Here, one may calculate

$$\begin{align} \mathbb{P}_X\big(|\langle X, v\rangle| \leqslant \varepsilon\big) \leqslant C\varepsilon \tag{1.10} \end{align}$$

for all $\varepsilon>0$, where $C>0$ is an absolute constant. However, as we depart from the case when X is gaussian, a much richer behavior emerges when the vector v admits some “arithmetic structure.” For example, if $v = n^{-1/2}(1,\ldots ,1)$, n is even, and the $X_i$ are uniform in $\{-1,1\}$, then

$$\begin{align*} \mathbb{P}_X\big(|\langle X, v\rangle| \leqslant \varepsilon\big) \geqslant \mathbb{P}_X\big(\langle X, v\rangle = 0\big) = \binom{n}{n/2}2^{-n} = \Theta(n^{-1/2}) \end{align*}$$

for any $0< \varepsilon < n^{-1/2}$. This, of course, stands in contrast to (1.10) for all $\varepsilon \ll n^{-1/2}$ and suggests that we employ an appropriate measure of the arithmetic structure of v.
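Both computations above are easy to make concrete. For gaussian X and a unit vector v, $\langle X, v\rangle$ is a standard gaussian, so $\mathbb{P}(|\langle X,v\rangle| \leqslant \varepsilon) = \mathrm{erf}(\varepsilon/\sqrt{2}) \leqslant \sqrt{2/\pi}\,\varepsilon$, i.e. (1.10) holds with $C = \sqrt{2/\pi}$. For $v = n^{-1/2}(1,\ldots,1)$ and Rademacher X, $\langle X, v\rangle = 0$ exactly when half of the coordinates equal 1, which has probability $\binom{n}{n/2}2^{-n} \sim \sqrt{2/(\pi n)}$. The sketch below (hypothetical names, standard library only) evaluates both.

```python
import math


def gaussian_small_ball(eps):
    """P(|g| <= eps) for g ~ N(0,1), i.e. P(|<X,v>| <= eps) for gaussian X and unit v."""
    return math.erf(eps / math.sqrt(2))


def rademacher_zero_prob(n):
    """P(<X,v> = 0) for v = n^{-1/2}(1,...,1) and X uniform on {-1,1}^n."""
    if n % 2:
        return 0.0  # a sum of an odd number of +-1's is never 0
    return math.comb(n, n // 2) / 2 ** n


if __name__ == "__main__":
    n, eps = 100, 0.01  # eps is well below n^{-1/2} = 0.1
    print("gaussian:  ", gaussian_small_ball(eps))
    print("rademacher:", rademacher_zero_prob(n))
```

For $\varepsilon \ll n^{-1/2}$ the gaussian probability shrinks linearly in $\varepsilon$, while the Rademacher probability is stuck at order $n^{-1/2}$, independent of $\varepsilon$.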

For this, we use the notion of the “least common denominator” of a vector, introduced by Rudelson and Vershynin [Reference Rudelson and Vershynin31]. For parameters $\alpha ,\gamma \in (0,1)$, define the least common denominator (LCD) of $v \in \mathbb {R}^n$ to be

$$ \begin{align} D_{\alpha,\gamma}(v):=\inf\bigg\{\phi>0:~\|\phi v\|_{\mathbb{T}}\leqslant \min\left\{\gamma\phi\|v\|_2, \sqrt{\alpha n}\right\}\bigg\}, \end{align} $$

where $ \| v \|_{\mathbb {T}} : = \mathrm {dist}(v,\mathbb {Z}^n)$ for all $v \in \mathbb {R}^n$. What makes this definition useful is the important “inverse Littlewood-Offord theorem” of Rudelson and Vershynin [Reference Rudelson and Vershynin31], which tells us (roughly speaking) that one has (1.10) whenever $D_{\alpha ,\gamma }(v) = \Omega (\varepsilon ^{-1})$. This notion of least common denominator is inspired by Tao and Vu’s introduction and development of “inverse Littlewood-Offord theory,” which is a collection of results guided by the meta-hypothesis: “If $\mathbb {P}_X( \langle X,v\rangle = 0 )$ is large then v must have structure.” We refer the reader to the paper of Tao and Vu [Reference Tao and Vu44] and the survey of Nguyen and Vu [Reference Nguyen and Vu28] for more background and history on inverse Littlewood-Offord theory and its role in random matrix theory. We may now state our version of (1.9), which uses $D_{\alpha ,\gamma }(v)^{-1}$ as a proxy for $\mathbb {P}(|\langle X, v \rangle | \leqslant \varepsilon )$.

Theorem 1.4. For $n \in \mathbb {N}$, $\varepsilon ,t>0$ and $\alpha ,\gamma \in (0,1)$, let $v \in {\mathbb {S}}^{n-1}$ satisfy $D_{\alpha ,\gamma }(v)> C/\varepsilon $ and let $u \in {\mathbb {S}}^{n-1}$. Let $\zeta $ be a subgaussian random variable, and let $X \in \mathbb {R}^n$ be a random vector whose coordinates are i.i.d. copies of $\zeta $. Then

where $C,c>0$ depend only on $\gamma $ and the distribution of $\zeta $.

In fact, we need a significantly more complicated version of this result (Lemma 5.2), where the small-ball event $|\langle X,v\rangle | \leqslant \varepsilon $ is replaced with a small-ball event of the form

where f is a quadratic polynomial in variables $X_1,\ldots ,X_n$. The proof of this result is carried out in Section 5 and is an important aspect of this paper. Theorem 1.4 is stated here to illustrate the general flavor of this result, and is not actually used in this paper. We do provide a proof in Appendix 9 for completeness and to suggest further inquiry into inequalities of the form (1.9).
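To get a feel for the least common denominator $D_{\alpha,\gamma}(v)$ defined above, one can approximate the infimum by scanning $\phi$ over a fine grid. The sketch below is only a numerical approximation under that assumption (the grid step `h` and cutoff `phi_max` are hypothetical parameters), with no claim of efficiency. For $v = n^{-1/2}(1,\ldots,1)$ one can check by hand that $D_{\alpha,\gamma}(v) = \sqrt{n}\,\max\{1/(1+\gamma),\, 1-\sqrt{\alpha}\}$, which the test uses.

```python
import math


def torus_dist(x):
    """||x||_T = dist(x, Z^n): Euclidean distance to the nearest integer point."""
    return math.sqrt(sum((xi - round(xi)) ** 2 for xi in x))


def lcd_grid(v, alpha, gamma, h=1e-3, phi_max=100.0):
    """Grid approximation of D_{alpha,gamma}(v) = inf{phi > 0 :
    ||phi v||_T <= min(gamma * phi * ||v||_2, sqrt(alpha * n))}.

    Returns the first grid point phi = k*h satisfying the inequality, or None.
    The result is at least the true infimum D, and at most D + h whenever
    every phi in [D, D + h] satisfies the inequality.
    """
    n = len(v)
    norm = math.sqrt(sum(vi * vi for vi in v))
    cap = math.sqrt(alpha * n)
    k = 1
    while k * h <= phi_max:
        phi = k * h
        if torus_dist([phi * vi for vi in v]) <= min(gamma * phi * norm, cap):
            return phi
        k += 1
    return None


if __name__ == "__main__":
    n = 16
    print(lcd_grid([1 / math.sqrt(n)] * n, alpha=0.3, gamma=0.9))
```

For this highly structured v the LCD is of order $\sqrt{n}$, in line with the $\Theta(n^{-1/2})$ small-ball probability computed for the same vector earlier in this section.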

We now turn to our second approximate negative correlation result, which deals with the intersection of two different small-ball events. This was originally proved in our paper [Reference Campos, Jenssen, Michelen and Sahasrabudhe4], but is put to a different use here. This result tells us that the events

$$\begin{align} \big\{|\langle X, v\rangle| \leqslant \varepsilon\big\} \qquad \text{and} \qquad \bigcap_{i=1}^k \big\{|\langle X, w_i\rangle| \ll 1\big\} \tag{1.12} \end{align}$$

are approximately negatively correlated, where $X = (X_1,\ldots ,X_n)$ is a vector with i.i.d. subgaussian entries and $w_1,\ldots ,w_k$ are orthonormal. That is, we prove something in the spirit of

$$\begin{align*}\mathbb{P}_X\bigg(\{ |\langle X, v\rangle| \leqslant \varepsilon \} \cap \bigcap_{i=1}^k \{ |\langle X, w_i \rangle| \ll 1 \}\bigg) \lesssim \mathbb{P}_X\big( |\langle X, v \rangle| \leqslant \varepsilon \big)\mathbb{P}_X\bigg( \bigcap_{i=1}^k \{ |\langle X, w_i \rangle| \ll 1 \}\bigg),\end{align*}$$

though in a more technical form.

To understand our result, again, it makes sense to consider the two events in (1.12) in isolation. Since we have already discussed the subtle event $|\langle X, v \rangle | \leqslant \varepsilon $, we consider the event on the right of (1.12). Returning to the gaussian case, we note that if X has independent standard gaussian entries, then one may compute directly that

by rotational invariance of the gaussian. Here, the generalization to other random variables is not as subtle, and the well-known Hanson-Wright [Reference Hanson and Wright18] inequality tells us that (1.13) holds more generally when X has general i.i.d. subgaussian entries.
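In the gaussian case the rotational-invariance computation is explicit: if $w_1,\ldots,w_k$ are orthonormal, then $(\langle X, w_1\rangle, \ldots, \langle X, w_k\rangle)$ is again a standard gaussian vector in $\mathbb{R}^k$. So for a fixed threshold $\beta$ (our illustrative stand-in for “$\ll 1$”), the probability that every $|\langle X, w_i\rangle| \leqslant \beta$ equals $\mathrm{erf}(\beta/\sqrt{2})^k$, which decays exponentially in k. The sketch below, with hypothetical names, evaluates this closed form and compares it with a small Monte Carlo experiment using Gram–Schmidt-orthonormalized random rows.

```python
import math
import random


def gram_schmidt(vectors):
    """Orthonormalize linearly independent vectors (classical Gram-Schmidt)."""
    basis = []
    for v in vectors:
        w = list(v)
        for b in basis:
            proj = sum(wi * bi for wi, bi in zip(w, b))
            w = [wi - proj * bi for wi, bi in zip(w, b)]
        norm = math.sqrt(sum(wi * wi for wi in w))
        basis.append([wi / norm for wi in w])
    return basis


def all_small_gaussian(k, beta):
    """P(|<X,w_i>| <= beta for all i) for gaussian X and k orthonormal w_i."""
    return math.erf(beta / math.sqrt(2)) ** k


def all_small_monte_carlo(rows, beta, trials, seed=0):
    """Monte Carlo estimate of the same probability for the given orthonormal rows."""
    rng = random.Random(seed)
    d = len(rows[0])
    hits = 0
    for _ in range(trials):
        x = [rng.gauss(0.0, 1.0) for _ in range(d)]
        if all(abs(sum(xi * wi for xi, wi in zip(x, w))) <= beta for w in rows):
            hits += 1
    return hits / trials


if __name__ == "__main__":
    for k in range(1, 6):
        print(k, all_small_gaussian(k, 0.5))
```

For non-gaussian subgaussian X this exact product formula is no longer available, which is where the Hanson-Wright inequality mentioned above takes over.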

Our innovation in this line is our second “approximate negative correlation theorem,” which allows us to control these two events simultaneously. Again, we use $D_{\alpha ,\gamma }(v)^{-1}$ as a proxy for $\mathbb {P}(|\langle X,v \rangle | \leqslant \varepsilon )$.

Here, for ease of exposition, we state a less general version for $X = (X_1,\ldots ,X_n) \in \{-1,0,1\}^n$ with i.i.d. c-lazy coordinates, meaning that $\mathbb {P}(X_i = 0) \geqslant 1-c$. Our theorem is stated in full generality in Section 9 (see Theorem 9.2).

Theorem 1.5. Let $\gamma \in (0,1)$, $d \in \mathbb {N}$, $\alpha \in (0,1)$, $0\leqslant k \leqslant c_1 \alpha d$, and $\varepsilon \geqslant \exp (-c_1\alpha d)$. Let $v \in {\mathbb {S}}^{d-1}$, let $w_1,\ldots ,w_k \in {\mathbb {S}}^{d-1}$ be orthogonal, and let W be the matrix with rows $w_1,\ldots ,w_k$.

If $X \in \{-1,0,1 \}^d$ is a $1/4$-lazy random vector and $D_{\alpha ,\gamma }(v)> 16/\varepsilon $, then

where $C,c_1,c_2>0$ are constants depending only on $\gamma $.
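Theorem 1.5 is stated for $1/4$-lazy vectors. The sketch below shows one way to sample a c-lazy vector in $\{-1,0,1\}^n$.

The symmetric choice $\mathbb {P}(X_i = \pm 1) = c/2$ is an assumption made here for illustration; it gives mean zero. The definition above only asks that $\mathbb {P}(X_i = 0) \geqslant 1-c$. The function name `sample_lazy_vector` is hypothetical.

```python
import random


def sample_lazy_vector(n, c, rng):
    """Sample X in {-1, 0, 1}^n with i.i.d. coordinates.

    Assumed distribution (one example of a c-lazy law): P(X_i = 0) = 1 - c and
    P(X_i = 1) = P(X_i = -1) = c / 2, so each coordinate has mean 0.
    """
    if not 0.0 <= c <= 1.0:
        raise ValueError("c must lie in [0, 1]")
    coords = []
    for _ in range(n):
        u = rng.random()
        if u < c / 2:
            coords.append(-1)
        elif u < c:
            coords.append(1)
        else:
            coords.append(0)
    return coords


rng = random.Random(0)
X = sample_lazy_vector(100_000, 0.25, rng)  # a 1/4-lazy vector, as in Theorem 1.5
zero_fraction = X.count(0) / len(X)         # should be close to 1 - c = 0.75
```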

In this paper, we will put Theorem 1.5 to a very different use from that in [Reference Campos, Jenssen, Michelen and Sahasrabudhe4], where we used it to prove a version of the following statement.

Let $v \in {\mathbb {S}}^{d-1}$ be a vector on the sphere, and let H be an $n \times d$ random $\{-1,0,1\}$-matrix conditioned on the event $\|Hv\|_2 \leqslant \varepsilon n^{1/2}$, for some $\varepsilon> e^{-cn}$. Here, $d = cn$ and $c>0$ is a sufficiently small constant. Then the probability that the rank of H is $n-k$ is $\leqslant e^{-ckn}$.

In this paper, we use (the generalization of) Theorem 1.5 to obtain good bounds on quantities of the form

where B is a fixed matrix with an exceptionally large eigenvalue (possibly as large as $e^{cn}$), but is otherwise pseudo-random, meaning (among other things) that the rest of the spectrum does not deviate too much from that of a random matrix. We use Theorem 1.5 to decouple the interaction of X with the largest eigenvector of B from the interaction of X with the rest of B. We refer the reader to (2.10) in the sketch in Section 2 and to Section 9 for more details.

The proof of Theorem 9.2 follows closely along the lines of the proof of Theorem 1.5 from [Reference Campos, Jenssen, Michelen and Sahasrabudhe4], requiring only technical modifications and adjustments. So as not to distract from the new ideas of this paper, we have sidelined this proof to the Appendix.

Finally, we note that it may be interesting to investigate these approximate negative correlation results in their own right, and to ask to what extent they can be sharpened.

2 Proof sketch

Here, we sketch the proof of Theorem 1.1. We begin by giving the rough “shape” of the proof while making a few simplifying assumptions, (2.2) and (2.3). We then discuss the substantial new ideas of this paper in Section 2.2, where we describe the considerable lengths we must go to in order to remove these simplifying assumptions. Indeed, if one were to tackle these assumptions using only standard tools, one could not hope for a bound much better than $\varepsilon ^{1/3}$ in Theorem 1.1 (see Section 2.2.2).

2.1 The shape of the proof

Recall that $A_{n+1}$ is an $(n+1)\times (n+1)$ random symmetric matrix with subgaussian entries. Let ${X := X_1,\ldots ,X_{n+1}}$ be the columns of $A_{n+1}$, let

and let $A_n$ be the matrix $A_{n+1}$ with the first row and column removed. We now use an important observation from Rudelson and Vershynin [Reference Rudelson and Vershynin31] that allows for a geometric perspective on the least singular value problem (Footnote 3)

Here, our first significant challenge presents itself: X and V are not independent, and thus the event $\mathrm {dist}(X,V) \leqslant \varepsilon $ is hard to understand directly. However, one can establish a formula for $\mathrm {dist}(X,V)$ that is a rational function in the vector X with coefficients that depend only on V. This brings us to the useful inequality (Footnote 4) due to Vershynin [Reference Vershynin46],

where we are ignoring the possibility of $A_n$ being singular for now. We thus arrive at the main technical focus of this paper: bounding the quantity on the right-hand side of (2.1).

We now make two simplifying assumptions, which allow us to give the overall shape of our proof without added complexity. We shall then layer on further complexities as we discuss how to remove these assumptions.

As a first simplifying assumption, let us assume that the collection of X that dominates the probability at (2.1) satisfies

This is not, at first blush, an unreasonable assumption to make, as $\mathbb {E}_X\, \|A_n^{-1}X\|_2^2 = \|A_n^{-1}\|_{\mathrm {HS}}^2 $. Indeed, the Hanson-Wright inequality tells us that $ \|A_n^{-1}X\|_2 $ is concentrated about its mean, for all reasonable $A_n^{-1}$. However, as we will see, this concentration is not strong enough for us here.
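The identity $\mathbb {E}_X\|MX\|_2^2 = \|M\|_{\mathrm {HS}}^2$ holds for any fixed matrix M when X has independent mean $0$, variance $1$ coordinates.

As a hedged sketch, the snippet checks this identity exactly for Rademacher X, an illustrative choice of $\zeta$, by enumerating all sign vectors. It also checks the exact variance $\mathrm{Var}(\|MX\|_2^2) = 2\sum_{i \neq j} B_{ij}^2$ with $B = M^{T}M$, which holds for Rademacher coordinates. The matrix M is an arbitrary stand-in for $A_n^{-1}$.

```python
import itertools


def moments_of_sq_norm(M):
    """Exact mean and variance of ||M x||_2^2 when x is uniform on {-1, 1}^n (Rademacher)."""
    n = len(M[0])
    values = []
    for x in itertools.product((-1, 1), repeat=n):
        Mx = [sum(row[j] * x[j] for j in range(n)) for row in M]
        values.append(sum(t * t for t in Mx))
    mean = sum(values) / len(values)
    var = sum((v - mean) ** 2 for v in values) / len(values)
    return mean, var


# An arbitrary fixed 3 x 4 matrix standing in for A_n^{-1}.
M = [[1.0, 2.0, 0.5, 0.0],
     [0.0, -1.0, 3.0, 1.0],
     [2.0, 0.0, 1.0, -2.0]]

hs_sq = sum(entry * entry for row in M for entry in row)  # ||M||_HS^2
cols = len(M[0])
B = [[sum(row[i] * row[j] for row in M) for j in range(cols)] for i in range(cols)]  # B = M^T M
off_diag_sq = sum(B[i][j] ** 2 for i in range(cols) for j in range(cols) if i != j)

mean, var = moments_of_sq_norm(M)
# Expected: mean == ||M||_HS^2 and var == 2 * sum_{i != j} B_ij^2.
```

We have $2\sum_{i\neq j}B_{ij}^2 \leqslant 2\|B\|_{\mathrm {HS}}^2 \leqslant 2\|M\|_{op}^2\|M\|_{\mathrm {HS}}^2$. So the standard deviation of $\|MX\|_2^2$ is at most $\sqrt{2}\,\|M\|_{op}\|M\|_{\mathrm {HS}}$. Heuristically, then, $\|MX\|_2$ fluctuates about $\|M\|_{\mathrm {HS}}$ on a scale of order $\|M\|_{op}$; this is a second-moment version of the concentration mentioned above.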

As a second assumption, we assume that the relevant matrices $A_n$ in the right-hand side of (2.1) satisfy

This turns out to be a very delicate assumption, as we will soon see, but it is not entirely unreasonable to make for the moment: for example, we have $\|A_n^{-1}\|_{\mathrm {HS}} = \Theta _{\delta }(n^{1/2})$ with probability $1-\delta $. This follows, for example, from Vershynin’s theorem [Reference Vershynin46] along with Corollary 8.4, which is based on the work of [Reference Erdős, Schlein and Yau13].

With these assumptions, we return to (2.1) and observe that our task has been reduced to proving

for all $\varepsilon> e^{-cn}$, where we have written $A^{-1} = A_{n}^{-1}$ and think of $A^{-1}$ as a fixed (pseudo-random) matrix.

We observe that, for a general fixed matrix $A^{-1}$, there is no hope of proving such an inequality. Indeed, if $A^{-1} = n^{-1/2}J$, where J is the all-ones matrix, then the left-hand side of (2.4) is $\geqslant cn^{-1/2}$ for all $\varepsilon>0$, falling vastly short of our desired (2.4).
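To see the counterexample concretely, take $\zeta$ to be Rademacher (an assumption for this sketch). Then $\langle n^{-1/2}JX, X\rangle = n^{-1/2}(X_1 + \cdots + X_n)^2$, which equals $0$ whenever the $\pm 1$ entries balance.

For even n this atom has mass $\binom{n}{n/2}2^{-n} \approx (2/(\pi n))^{1/2}$. So the small-ball probability around $r = 0$ is at least of order $n^{-1/2}$, no matter how small $\varepsilon$ is. The snippet computes this mass exactly.

```python
import math


def atom_at_zero(n):
    """P(X_1 + ... + X_n = 0) for i.i.d. Rademacher X_i, i.e. binom(n, n/2) / 2^n (0 if n is odd).

    On this event <n^{-1/2} J X, X> = n^{-1/2} (X_1 + ... + X_n)^2 equals 0 exactly.
    """
    if n % 2:
        return 0.0
    return math.comb(n, n // 2) / 2 ** n


# The atom is of order n^{-1/2}: sqrt(n) * mass approaches sqrt(2 / pi), about 0.798.
scaled = {n: math.sqrt(n) * atom_at_zero(n) for n in (100, 400, 1000)}
```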

Thus, we need to introduce a collection of fairly strong “quasi-randomness properties” of A that hold with probability $1-e^{-cn}$. These will ensure that $A^{-1}$ is sufficiently “non-structured” to make our goal (2.4) possible. The most important and difficult of these quasi-randomness conditions is to show that the eigenvectors v of A satisfy

for some appropriate $\alpha ,\gamma $, where $D_{\alpha ,\gamma }(v)$ is the least common denominator of v defined at (1.11). Roughly, this means that none of the eigenvectors of A “correlate” with a rescaled copy of the integer lattice $t\mathbb {Z}^n$, for any $e^{-cn} \leqslant t \leqslant 1$.
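The least common denominator $D_{\alpha ,\gamma }(v)$ is defined at (1.11), which is not part of this extract. The following is therefore only a heuristic illustration of why correlation with a rescaled lattice $t\mathbb {Z}^n$ is harmful.

Assume Rademacher coordinates and the convention $\varphi(v;\theta) = \mathbb{E}\,e^{2\pi i\theta\sum_i v_iX_i}$, matching the exponent in the Esseen-type bound of Section 2.2.4. Then $\varphi(v;\theta) = \prod_i\cos(2\pi\theta v_i)$.

Take the lattice-like vector $v = n^{-1/2}(1,\ldots,1)$. Its characteristic function decays like $e^{-2\pi^2\theta^2}$ for small $\theta$, but it returns to modulus $1$ at $\theta = \sqrt{n}/2$. Consequently an integral of $|\varphi|$ over a long range of $\theta$ cannot stay bounded.

```python
import math


def char_fn_rademacher(v, theta):
    """phi(v; theta) = E exp(2 pi i theta sum_i v_i X_i) for i.i.d. Rademacher X_i.

    Each factor E exp(2 pi i theta v_i X_i) equals cos(2 pi theta v_i), so phi is real.
    """
    result = 1.0
    for vi in v:
        result *= math.cos(2 * math.pi * theta * vi)
    return result


n = 10_000
v_lattice = [1 / math.sqrt(n)] * n  # v = n^{-1/2}(1, ..., 1) lies in the rescaled lattice n^{-1/2} Z^n

# Small theta: gaussian-like decay, phi ~ exp(-2 pi^2 theta^2).
small = char_fn_rademacher(v_lattice, 0.5)
gaussian_prediction = math.exp(-2 * math.pi ** 2 * 0.5 ** 2)

# theta = sqrt(n) / 2: every factor is cos(pi) = -1, so |phi| returns to 1.
revival = char_fn_rademacher(v_lattice, math.sqrt(n) / 2)
```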

Proving that these quasi-randomness properties hold with probability $1-e^{-cn}$ is a difficult task and depends fundamentally on the ideas in our previous paper [Reference Campos, Jenssen, Michelen and Sahasrabudhe4]. Since we don’t want these ideas to distract from the new ideas in this paper, we have opted to carry out the details in the Appendix. With these quasi-randomness conditions in tow, we can return to (2.4) and apply Esseen’s inequality to bound the left-hand side of (2.4) in terms of the characteristic function ${\varphi }({\theta })$ of the random variable $\langle A^{-1}X, X \rangle $,

$$\begin{align*}\sup_r \mathbb{P}_{X}\big( |\langle A^{-1}X, X \rangle - r| \leqslant \varepsilon n^{1/2} \big) \lesssim \varepsilon \int_{-1/\varepsilon}^{1/\varepsilon} |{\varphi}({\theta})| \, d\theta. \end{align*}$$

While this maneuver has been quite successful for (linear) sums of independent random variables, the characteristic function of quadratic forms has proved to be a more elusive object. For example, even the analogue of the Littlewood-Offord theorem is not fully understood in the quadratic case [Reference Costello6, Reference Meka, Nguyen and Vu23]. Here, we appeal to our quasi-random conditions to avoid some of the traditional difficulties: we use an application of Jensen’s inequality to decouple the quadratic form and bound ${\varphi }({\theta })$ pointwise in terms of an average over a related collection of characteristic functions of linear sums of independent random variables

where Y is a random vector with i.i.d. entries and ${\varphi }(v; {\theta })$ denotes the characteristic function of the sum $\sum _{i} v_iX_i$, where the $X_i$ are i.i.d. with the original distribution $\zeta $. We can then use our pseudo-random conditions on A to bound

for all but exponentially few Y, allowing us to show

$$\begin{align*}\int_{-1/\varepsilon}^{1/\varepsilon} |{\varphi}({\theta})| \, d\theta \leqslant \int_{-1/\varepsilon}^{1/\varepsilon} \left[ \mathbb{E}_{Y} |{\varphi}(A^{-1}Y; {\theta})| \right]^{1/2} d\theta \leqslant \int_{-1/\varepsilon}^{1/\varepsilon} \left(\exp\left( -c{\theta}^{2} \right) + e^{-cn}\right)\, d{\theta} = O(1) \end{align*}$$

thereby completing the proof, up to our simplifying assumptions.
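The final estimate in the display above is elementary. For simplicity we assume that the same constant $c$ appears in the integrand and in the standing assumption $\varepsilon > e^{-cn}$; in general one adjusts constants. Spelled out:

$$\begin{align*}\int_{-1/\varepsilon}^{1/\varepsilon} \left(e^{-c\theta^{2}} + e^{-cn}\right) d\theta \leqslant \int_{-\infty}^{\infty} e^{-c\theta^{2}}\, d\theta + \frac{2e^{-cn}}{\varepsilon} = \sqrt{\frac{\pi}{c}} + \frac{2e^{-cn}}{\varepsilon} < \sqrt{\frac{\pi}{c}} + 2 .\end{align*}$$

Hence the integral is $O(1)$, with the implied constant depending only on $c$.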

2.2 Removing the simplifying assumptions

While this is a good story to work with, the challenge starts when we turn to remove our simplifying assumptions (2.2) and (2.3). We also note that if one applies only standard methods to remove these assumptions, one gets stuck at the “base case” outlined below. We start by discussing how to remove the simplifying assumption (2.3), whose resolution governs the overall structure of the paper.

2.2.1 Removing the assumption (2.3)

What is most concerning about making the assumption $\|A_n^{-1}\|_{\mathrm {HS}} \approx n^{1/2}$ is that it is, in a sense, circular: If we assume the modest-looking hypothesis $\mathbb {E}\, \|A^{-1}\|_{\mathrm {HS}} \lesssim n^{1/2}$, we would be able to deduce

by Markov’s inequality. In other words, showing that $\|A^{-1}\|_{\mathrm {HS}}$ is concentrated about $n^{1/2}$ (in the above sense) actually implies Theorem 1.1. However, this is not as worrisome as it first appears. Indeed, if we are trying to prove Theorem 1.1 for $(n+1) \times (n+1)$ matrices using the above outline, we only need to control the Hilbert-Schmidt norm of the inverse $A_n^{-1}$ of the minor. This suggests an inductive or (as we use) an iterative “bootstrapping argument” to successively improve the bound. Thus, in effect, we look to prove

for successively larger $\alpha \in (0,1]$. Note that we have to cut out the event of A being singular from our expectation, as this event has nonzero probability.
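The Markov step mentioned above can be spelled out as follows. This is a sketch, and it uses only two facts. The first is that $\sigma_{\min}(A)^{-1} = \|A^{-1}\|_{op} \leqslant \|A^{-1}\|_{\mathrm {HS}}$ on the event that A is invertible. The second is the hypothesis $\mathbb{E}\,[\|A^{-1}\|_{\mathrm {HS}}\mathbf{1}\{A \text{ invertible}\}] \lesssim n^{1/2}$, with the singular event set aside as in the text.

$$\begin{align*}\mathbb{P}\big(\sigma_{\min}(A) \leqslant \varepsilon n^{-1/2},\ A \text{ invertible}\big) \leqslant \mathbb{P}\big(\|A^{-1}\|_{\mathrm{HS}}\mathbf{1}\{A \text{ invertible}\} \geqslant \varepsilon^{-1} n^{1/2}\big) \leqslant \frac{\varepsilon\, \mathbb{E}\big[\|A^{-1}\|_{\mathrm{HS}}\mathbf{1}\{A \text{ invertible}\}\big]}{n^{1/2}} \lesssim \varepsilon.\end{align*}$$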

2.2.2 Base case

In the first step of our iteration, we prove a “base case” of

without the assumption (2.3), which is equivalent to

To prove this “base case,” we upgrade (2.1) to

$$ \begin{align} \mathbb{P}\left(\sigma_{\min}(A_{n+1}) \leqslant \varepsilon n^{-1/2} \right) \lesssim \varepsilon + \sup_{r \in \mathbb{R}}\, \mathbb{P}\left(\frac{|\langle A_n^{-1}X, X\rangle - r|}{ \|A_n^{-1} X \|_2} \leqslant C \varepsilon , \|A_{n}^{-1}\|_{\mathrm{HS}} \leqslant \frac{n^{1/2}}{\varepsilon} \right) \,.\end{align} $$

In other words, we can intersect with the event

at a loss of only $C\varepsilon $ in probability.

We then push through the proof outlined in Section 2.1 to obtain our initial weak bound (2.5). For this, we first use the Hanson-Wright inequality to give a weak version of (2.2), and then use (2.7) as a weak version of our assumption (2.3). We note that this base step (2.5) already improves the best-known bounds on the least singular value problem for random symmetric matrices.

2.2.3 Bootstrapping

To improve on this bound, we use a “bootstrapping” lemma which, applied three times, allows us to improve (2.5) to the near-optimal result

Proving this bootstrapping lemma essentially reduces to the problem of getting good estimates on

where A is a matrix with $\|A^{-1}\|_{op} = \delta ^{-1}$ and $ \delta \in (\varepsilon , c n^{-1/2})$ but is “otherwise pseudo-random.” Here, we require two additional ingredients.

To start unpacking (2.9), we use that $\|A^{-1}\|_{op} = \delta ^{-1}$ to see that if v is a unit eigenvector corresponding to the largest eigenvalue of $A^{-1}$, then

While this leads to a decent first bound of $O(\delta s)$ on the probability (2.9) (after using the quasi-randomness properties of A), this is not enough for our purposes. In fact, we have to use the additional information that X must also have small inner product with many other eigenvectors of A (assuming s is sufficiently small). Working along these lines, we show that (2.9) is bounded above by

$$ \begin{align} \mathbb{P}_X\bigg( |\langle X, v_1 \rangle| \leqslant s \delta \text{ and } |\langle X, v_i\rangle| \leqslant \sigma_i s \text{ for all } i =2,\dots, n-1 \bigg), \end{align} $$

where $v_i$ is a unit eigenvector of A corresponding to the singular value $\sigma _i = \sigma _i(A)$. Now, appealing to the quasi-random properties of the eigenvectors of $A^{-1}$, we may apply our approximate negative correlation theorem (Theorem 1.5) to see that (2.10) is at most

where $c>0$ is a constant and $N_{A}(a,b)$ denotes the number of eigenvalues of the matrix A in the interval $(a,b)$. The first $O(\delta s)$ factor comes from the event $|\langle X, v_1 \rangle | \leqslant s\delta $, and the second factor comes from approximating

This bound is now sufficiently strong for our purposes, provided the spectrum of A adheres sufficiently closely to the typical spectrum of $A_n$. This leads us to study the rest of the spectrum of $A_n$ and, in particular, the next smallest singular values $\sigma _{n-1},\sigma _{n-2},\ldots $.

Now, this might seem like a step in the wrong direction, as we are now led to understand the behavior of many singular values and not just the smallest. However, this “loss” is outweighed by the fact that we need only understand these eigenvalues on scales of size $\Omega ( n^{-1/2} )$, on which their behavior is now well understood due to the important work of Erdős et al. [Reference Erdős, Schlein and Yau13].
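A minimal sketch of the counting function $N_{A}(a,b)$ used above follows, assuming numpy is available. `eigenvalue_count` is a hypothetical helper, not code from the paper.

The usage lines apply it to a gaussian symmetric matrix (an illustrative choice) in a window of width $n^{-1/2}$ around $0$. This is the scale on which the text says the spectrum needs to be controlled. By the semicircle law one expects only $O(1)$ eigenvalues in such a window on average.

```python
import numpy as np


def eigenvalue_count(A, a, b):
    """N_A(a, b): the number of eigenvalues of the symmetric matrix A in the open interval (a, b)."""
    eigenvalues = np.linalg.eigvalsh(A)
    return int(np.count_nonzero((eigenvalues > a) & (eigenvalues < b)))


# Usage: a symmetric gaussian matrix and a window of width n^{-1/2} centered at 0.
rng = np.random.default_rng(2)
n = 400
G = rng.standard_normal((n, n))
A = np.triu(G) + np.triu(G, 1).T
half_width = 0.5 / np.sqrt(n)
count_near_zero = eigenvalue_count(A, -half_width, half_width)
```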

These results ultimately give sufficiently strong bounds on quantities of the form (2.9), which, in turn, allow us to prove our “bootstrapping lemma.” We then use this lemma to prove the near-optimal result

2.2.4 Removing the assumption (2.2) and the last jump to Theorem 1.1

We now turn to discuss how to remove our simplifying assumption (2.2), made above, which will allow us to close the gap between (2.13) and Theorem 1.1.

To achieve this, we need to consider how $\|A^{-1}X\|_2$ varies about $\|A^{-1}\|_{\mathrm {HS}}$, where we are, again, thinking of $A^{-1} = A_{n}^{-1}$ as a sufficiently quasi-random matrix. Now, the Hanson-Wright inequality tells us that, indeed, $\|A^{-1}X \|_2$ is concentrated about $\|A^{-1} \|_{\mathrm {HS}}$ on a scale $ \lesssim \|A^{-1}\|_{op}$. While this is certainly useful for us, it is far from enough to prove Theorem 1.1. For this, we need to rule out any “macroscopic” correlation between the events

for all $K> 0$. Our first step toward understanding (2.14) is to replace the quadratic large deviation event $\|A^{-1}X\|_2> K\|A^{-1}\|_{\mathrm {HS}} $ with a collection of linear large deviation events:

where $w_n,w_{n-1},\ldots ,w_1$ are the eigenvectors of A corresponding to singular values $\sigma _n \leqslant \sigma _{n-1} \leqslant \ldots \leqslant \sigma _1$, respectively, and the $\log (i+1)$ factor should be seen as a weight function that assigns more weight to the smaller singular values.
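Replacing the quadratic event by linear ones rests on a spectral identity. For symmetric invertible A with orthonormal eigenvectors $w_i$ and singular values $\sigma_i = |\lambda_i|$,
$$\|A^{-1}X\|_2^2 = \sum_i \sigma_i^{-2}\langle X, w_i\rangle^2, \qquad \|A^{-1}\|_{\mathrm {HS}}^2 = \sum_i\sigma_i^{-2}.$$

The snippet checks both identities numerically. The weighted events themselves, with the $\log(i+1)$ factors, are not reproduced in this extract. The gaussian test matrix and the Rademacher X are illustrative assumptions, and numpy is assumed.

```python
import numpy as np

rng = np.random.default_rng(3)
n = 6
G = rng.standard_normal((n, n))
A = np.triu(G) + np.triu(G, 1).T     # symmetric test matrix (invertible with probability 1)
X = rng.choice([-1.0, 1.0], size=n)  # Rademacher vector, an illustrative choice of zeta

eigenvalues, W = np.linalg.eigh(A)   # columns of W are orthonormal eigenvectors w_i
sigma = np.abs(eigenvalues)          # singular values of a symmetric matrix are |eigenvalues|
projections = W.T @ X                # entry i is <X, w_i>

lhs = np.linalg.norm(np.linalg.solve(A, X)) ** 2  # ||A^{-1} X||_2^2
rhs = np.sum(projections ** 2 / sigma ** 2)       # sum_i sigma_i^{-2} <X, w_i>^2
hs_sq = np.sum(sigma ** -2.0)                     # ||A^{-1}||_HS^2 = sum_i sigma_i^{-2}
```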

Interestingly, we run into a similar obstacle as before: If the “bulk” of the spectrum of $A^{-1}$ is sufficiently erratic, this replacement step will be too lossy for our purposes. Thus, we are led to prove another result, showing that we may assume that the spectrum of $A^{-1}$ adheres sufficiently to the typical spectrum of $A_n$. This reduces to proving

$$\begin{align*}\mathbb{E}_{A_n}\, \left[\frac{ \sum_{i=1}^n \sigma_{n-i-1}^{-2} (\log i )^2}{ \sum_{i=1}^n \sigma_{n-i-1}^{-2} } \right] = O(1) ,\end{align*}$$

where the left-hand side is a statistic which measures the degree of distortion of the smallest singular values of $A_n$. To prove this, we again lean on the work of Erdős et al. [Reference Erdős, Schlein and Yau13].

Thus, we have reduced the task of proving the approximate independence of the events at (2.14) to proving the approximate independence of the collection of events

This is something, it turns out, that we can handle on the Fourier side by using a quadratic analogue of our negative correlation inequality, Theorem 1.4. The idea here is to prove an Esseen-type bound of the form

$$ \begin{align} \mathbb{P}( |\langle A^{-1} X, X \rangle - t| < \delta, \langle X,u \rangle \geqslant s ) \lesssim \delta e^{-s}\int_{-1/\delta}^{1/\delta} \left|\mathbb{E} e^{2\pi i \theta \langle A^{-1} X, X \rangle + \langle X,u \rangle }\right|\,d\theta\,.\end{align} $$

This introduces an extra “exponential tilt” into the characteristic function. From here, one can carry out the plan sketched in Section 2.1 with this more complicated version of Esseen’s inequality, and then integrate over s to upgrade (2.13) to Theorem 1.1.

2.3 Outline of the rest of the paper

In the next short section, we introduce some key definitions, notation, and preliminaries that we use throughout the paper. In Section 4, we establish a collection of crucial quasi-randomness properties that hold for the random symmetric matrix $A_n$ with probability $1-e^{-\Omega (n)}$. We shall condition on these events for most of the paper. In Section 5, we detail our Fourier decoupling argument and establish an inequality of the form (2.15). This allows us to prove our new approximate negative correlation result, Lemma 5.2. In Section 6, we prepare the ground for our iterative argument by establishing (2.6), thereby switching our focus to the study of the quadratic form $\langle A_n^{-1}X, X\rangle $. In Section 7, we prove Theorem 1.2 and Theorem 1.3, which tell us that the eigenvalues of A cannot “crowd” small intervals. In Section 8, we establish regularity properties for the bulk of the spectrum of $A^{-1}$. In Section 9, we deploy the approximate negative correlation result (Theorem 1.5) in order to carry out the portion of the proof sketched between (2.9) and (2.12). In Section 10, we establish our base step (2.5) and bootstrap this to prove the near-optimal bound (2.13). In the final section, Section 11, we complete the proof of our main Theorem 1.1.

3 Key definitions and preliminaries

We first need to get a few notions out of the way; these are related to our paper [Reference Campos, Jenssen, Michelen and Sahasrabudhe4] on the singularity of random symmetric matrices.

3.1 Subgaussian and matrix definitions

Throughout, $\zeta $ will be a mean $0$, variance $1$ random variable. We define the subgaussian moment of $\zeta $ to be

A mean $0$, variance $1$ random variable is said to be subgaussian if $ \| \zeta \|_{\psi _2}$ is finite. We define $\Gamma $ to be the set of subgaussian random variables and, for $B>0$, we define $\Gamma _B \subseteq \Gamma $ to be the subset of those $\zeta $ with $\| \zeta \|_{\psi _2} \leqslant B$.
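The defining display for $\| \zeta \|_{\psi _2}$ is not reproduced in this extract. As a hedged illustration, the snippet computes one common convention, the Orlicz norm $\inf\{t > 0 : \mathbb{E}\exp(\zeta^2/t^2) \leqslant 2\}$, for a finitely supported $\zeta$ by bisection.

Standard definitions of the subgaussian moment agree up to absolute constant factors, so the paper's normalization may differ. For a Rademacher variable the answer is $(\log 2)^{-1/2}$. The helper names are hypothetical.

```python
import math


def _sq_mgf(dist, t):
    """E exp(zeta^2 / t^2) for a finitely supported zeta given as (value, probability) pairs."""
    total = 0.0
    for value, prob in dist:
        if prob == 0:
            continue
        exponent = value * value / (t * t)
        if exponent > 700.0:  # math.exp would overflow; the constraint E(...) <= 2 certainly fails
            return math.inf
        total += prob * math.exp(exponent)
    return total


def psi2_norm(dist, tol=1e-12):
    """Orlicz norm inf{t > 0 : E exp(zeta^2 / t^2) <= 2}, found by bisection (assumed convention)."""
    lo, hi = 1e-6, 1.0
    while _sq_mgf(dist, hi) > 2.0:  # grow hi until the constraint holds there
        hi *= 2.0
    while hi - lo > tol:            # the constraint fails at lo and holds at hi
        mid = 0.5 * (lo + hi)
        if _sq_mgf(dist, mid) <= 2.0:
            hi = mid
        else:
            lo = mid
    return hi


RADEMACHER = [(-1.0, 0.5), (1.0, 0.5)]
rademacher_psi2 = psi2_norm(RADEMACHER)  # equals 1 / sqrt(log 2), about 1.2011
```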

For $\zeta \in \Gamma $, define $\mathrm {Sym\,}_{n}(\zeta )$ to be the probability space on $n \times n$ symmetric matrices A for which $(A_{i,j})_{i \geqslant j}$ are independent and distributed according to $\zeta $. Similarly, we write $X \sim \mathrm {Col\,}_n(\zeta )$ if $X \in \mathbb {R}^n$ is a random vector whose coordinates are i.i.d. copies of $\zeta $.
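The two sampling models can be made concrete with a short sketch. It assumes, purely for illustration, that $\zeta $ is a Rademacher variable (uniform on $\{-1,+1\}$, so mean $0$ and variance $1$); the helper names `rademacher`, `sample_sym` and `sample_col` are hypothetical. The entries with $i \geqslant j$ are drawn independently and the rest are filled in by symmetry.

```python
import numpy as np

def rademacher(rng, size):
    """Example choice of zeta (an assumption, not fixed by the text):
    uniform on {-1, +1}, which has mean 0 and variance 1."""
    return rng.choice([-1.0, 1.0], size=size)

def sample_sym(n, sample_zeta, rng):
    """Sample A ~ Sym_n(zeta): the entries A[i, j] with i >= j are i.i.d.
    copies of zeta, and the entries above the diagonal are set by symmetry."""
    A = np.zeros((n, n))
    rows, cols = np.tril_indices(n)      # all index pairs (i, j) with i >= j
    A[rows, cols] = sample_zeta(rng, rows.size)
    A[cols, rows] = A[rows, cols]        # mirror into the upper triangle
    return A

def sample_col(n, sample_zeta, rng):
    """Sample X ~ Col_n(zeta): a vector in R^n with i.i.d. zeta coordinates."""
    return sample_zeta(rng, n)

rng = np.random.default_rng(0)
A = sample_sym(5, rademacher, rng)
X = sample_col(5, rademacher, rng)
```

Any other mean $0$, variance $1$ sampler with the same `(rng, size)` signature can be passed in place of `rademacher`.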

We shall think of the spaces $\{\mathrm {Sym\,}_n(\zeta )\}_{n}$ as coupled in the natural way: the matrix $A_{n+1} \sim \mathrm {Sym\,}_{n+1}(\zeta )$ can be sampled by first sampling $A_n \sim \mathrm {Sym\,}_n(\zeta )$, which we think of as the principal minor $(A_{n+1})_{[2,n+1] \times [2,n+1]}$, and then generating the first row and column of $A_{n+1}$ by generating a random column $X \sim \mathrm {Col\,}_n(\zeta )$. In fact, it will be convenient to work with a random $(n+1)\times (n+1)$ matrix, which we call $A_{n+1}$ throughout. This is justified, as much of the work is done with the principal minor $A_n$ of $A_{n+1}$, thanks to the bound (2.1) and Lemma 6.1.
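The coupling can be illustrated by assembling $A_{n+1}$ from a previously sampled minor $A_n$ and a fresh column $X$. This is a sketch under stated assumptions: $\zeta $ is again taken to be Rademacher, the helper `extend` is hypothetical, and the diagonal entry $(A_{n+1})_{1,1}$, which the text does not specify separately, is assumed here to be an independent copy of $\zeta $. Python indices are $0$-based, so the minor indexed by $[2,n+1] \times [2,n+1]$ becomes the block `A[1:, 1:]`.

```python
import numpy as np

def extend(A_n, X, corner):
    """Assemble A_{n+1} from its principal minor A_n, a column X ~ Col_n(zeta)
    and a diagonal entry `corner`. Indices are 0-based, so the minor
    (A_{n+1})_{[2,n+1] x [2,n+1]} of the text is the block A[1:, 1:]."""
    n = A_n.shape[0]
    A = np.empty((n + 1, n + 1))
    A[0, 0] = corner      # assumed: an independent copy of zeta
    A[1:, 0] = X          # first column, below the diagonal
    A[0, 1:] = X          # first row, by symmetry
    A[1:, 1:] = A_n       # the previously sampled minor
    return A

rng = np.random.default_rng(1)
n = 4
M = rng.choice([-1.0, 1.0], size=(n, n))
A_n = np.tril(M) + np.tril(M, -1).T         # a Rademacher sample of Sym_n(zeta)
X = rng.choice([-1.0, 1.0], size=n)         # the new column X ~ Col_n(zeta)
A_next = extend(A_n, X, rng.choice([-1.0, 1.0]))
```

Because `A_n` is never resampled, conditioning on the minor and randomizing only over `X` (and the corner entry) is exactly the point of view the coupling allows.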

3.2 Compressible vectors

We shall require the now-standard notions of compressible vectors, as defined by Rudelson and Vershynin [Reference Rudelson and Vershynin31].

For parameters $\rho ,\delta \in (0,1)$, we define the set of compressible vectors $\mathrm {Comp\,}(\delta ,\rho )$ to be the set of vectors in ${\mathbb {S}}^{n-1}$ that are at distance at most $\rho $ from a vector supported on at most $\delta n$ coordinates. We then define the set of incompressible vectors to be all other unit vectors, that is,

$\mathrm {Incomp\,}(\delta ,\rho ) := {\mathbb {S}}^{n-1} \setminus \mathrm {Comp\,}(\delta ,\rho ).$
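Membership in $\mathrm {Comp\,}(\delta ,\rho )$ can be tested directly, since the vector supported on at most $s$ coordinates that is closest to $v$ (in Euclidean distance) keeps the $s$ largest entries $|v_i|$ and zeroes the rest. The sketch below uses that observation; the helper names `dist_to_sparse` and `is_compressible`, and the values $\delta = 0.1$, $\rho = 0.5$ in the example, are hypothetical choices for illustration only.

```python
import numpy as np

def dist_to_sparse(v, s):
    """Euclidean distance from v to the set of vectors supported on at most s
    coordinates. The nearest such vector keeps the s largest |v_i| and zeroes
    the rest, so the distance is the norm of the remaining coordinates."""
    mags = np.sort(np.abs(v))[::-1]      # magnitudes in decreasing order
    return float(np.linalg.norm(mags[max(s, 0):]))

def is_compressible(v, delta, rho):
    """Test whether a unit vector v in S^{n-1} lies in Comp(delta, rho)."""
    s = int(np.floor(delta * v.size))    # at most delta * n coordinates
    return dist_to_sparse(v, s) <= rho

n = 100
e1 = np.zeros(n)
e1[0] = 1.0                              # 1-sparse, so compressible
flat = np.ones(n) / np.sqrt(n)           # spread out evenly, so incompressible
print(is_compressible(e1, 0.1, 0.5), is_compressible(flat, 0.1, 0.5))  # True False
```

For the flat vector, the $90$ coordinates outside the best $10$-sparse approximation each equal $0.1$, so the distance is $\sqrt{0.9} \approx 0.95 > 0.5$.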

The following basic fact about incompressible vectors from [Reference Rudelson and Vershynin31] will be useful throughout:

Fact 3.1. For each $\delta ,\rho \in (0,1)$, there is a constant $c_{\rho ,\delta } \in (0,1)$ so that for all $v \in \mathrm {Incomp\,}(\delta ,\rho )$, we have $|v_j|n^{1/2} \in [ c_{\rho ,\delta }, c_{\rho ,\delta }^{-1}]$ for at least $c_{\rho ,\delta } n$ values of j.
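The conclusion of Fact 3.1 is easy to check for a given vector and a given constant. Fact 3.1 does not make $c_{\rho ,\delta }$ explicit, so the value $c = 0.1$ below is an arbitrary illustrative choice, and the helpers `flat_coordinates` and `has_flat_part` are hypothetical names. The sketch only tests the stated property; it does not compute $c_{\rho ,\delta }$.

```python
import numpy as np

def flat_coordinates(v, c):
    """Indices j with |v_j| * n^{1/2} in [c, 1/c] (0-based indices)."""
    scaled = np.abs(v) * np.sqrt(v.size)
    return np.flatnonzero((scaled >= c) & (scaled <= 1.0 / c))

def has_flat_part(v, c):
    """Conclusion of Fact 3.1 for a given c: at least c * n flat coordinates."""
    return flat_coordinates(v, c).size >= c * v.size

n = 400
flat = np.ones(n) / np.sqrt(n)           # every coordinate has |v_j| n^{1/2} = 1
e1 = np.zeros(n)
e1[0] = 1.0                              # one coordinate with |v_j| n^{1/2} = 20
print(has_flat_part(flat, 0.1), has_flat_part(e1, 0.1))  # True False
```

The standard basis vector fails in both directions: its single nonzero coordinate is too large and all others are too small, matching the intuition that compressible vectors lack a large "flat" subvector.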

Fact 3.1 assures us that for each incompressible vector, we can find a large subvector that is “flat.” Using the work of Vershynin [Reference Vershynin46], we will safely be able to ignore compressible vectors. In particular, [Reference Vershynin46, Proposition 4.2] implies the following lemma. We refer the reader to Appendix XII for details.

Lemma 3.2. For $B>0$ and $\zeta \in \Gamma _B$, let $A \sim \mathrm {Sym\,}_n(\zeta )$. Then there exist constants $\rho ,\delta ,c \in (0,1)$, depending only on B, so that

and

The first statement says, roughly, that $A^{-1} u$ is incompressible for each fixed u; the second states that all unit eigenvectors are incompressible.
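The two statements can be observed numerically, although a simulation is of course not a proof. The sketch below assumes a Rademacher $\zeta $, a fixed unit vector $u = e_1$, and $\delta = 0.1$; the constants of Lemma 3.2 are not explicit, so these are hypothetical choices, as is the helper name `lemma_3_2_experiment`. It reports the distance from the normalized vector $A^{-1}u$, and the smallest such distance over all unit eigenvectors of $A$, to the set of vectors supported on at most $\delta n$ coordinates.

```python
import numpy as np

def dist_to_sparse(v, s):
    """Distance from v to the vectors supported on at most s coordinates."""
    mags = np.sort(np.abs(v))[::-1]
    return float(np.linalg.norm(mags[s:]))

def lemma_3_2_experiment(n, delta, rng):
    """Sample a Rademacher A ~ Sym_n(zeta) and return the distances to
    floor(delta * n)-sparse vectors of (i) A^{-1}u / |A^{-1}u| for a fixed
    unit vector u and (ii) the closest unit eigenvector of A."""
    M = rng.choice([-1.0, 1.0], size=(n, n))
    A = np.tril(M) + np.tril(M, -1).T        # symmetric, i.i.d. on and below the diagonal
    s = int(np.floor(delta * n))
    u = np.zeros(n)
    u[0] = 1.0                               # a fixed unit vector (hypothetical choice)
    w = np.linalg.solve(A, u)
    w /= np.linalg.norm(w)
    _, V = np.linalg.eigh(A)                 # columns of V are unit eigenvectors
    eig_dist = min(dist_to_sparse(V[:, k], s) for k in range(n))
    return dist_to_sparse(w, s), eig_dist

rng = np.random.default_rng(3)
d_inv, d_eig = lemma_3_2_experiment(200, 0.1, rng)
# A unit vector lies in Comp(0.1, rho) exactly when its distance is at most rho.
print(d_inv, d_eig)
```

Distances bounded well away from $0$ are consistent with both vectors being incompressible for moderate $\rho $; the lemma asserts this with probability at least $1 - e^{-\Omega(n)}$, which a single sample cannot confirm.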

Remark 3.3 (Choice of constants, $\rho ,\delta ,c_{\rho ,\delta }$).

Throughout, we let $\rho ,\delta $ denote the constants guaranteed by Lemma 3.2 and $c_{\rho ,\delta }$ the corresponding constant from Fact 3.1. These constants appear throughout the paper and shall always be considered fixed.

Lemma 3.2 follows easily from [Reference Vershynin46, Proposition 4.2] with a simple net argument.

3.3 Notation

We quickly define some notation. For a random variable X, we use the notation $\mathbb {E}_X$ for the expectation with respect to X, and we use the notation $\mathbb {P}_X$ analogously. For an event $\mathcal {E}$, we write ${\mathbf {1}}_{\mathcal {E}}$ or ${\mathbf {1}} \{ \mathcal {E}\}$ for the indicator function of the event $\mathcal {E}$. We write $\mathbb {E}^{\mathcal {E}}$ for the expectation defined by $\mathbb {E}^{\mathcal {E}}[\, \cdot \, ] = \mathbb {E}[\, \cdot \, {\mathbf {1}}_{\mathcal {E}}]$. For a vector $v \in \mathbb {R}^{n}$ and $J \subset [n]$, we write $v_J$ for the vector whose $i$th coordinate is $v_i$ if $i \in J$ and $0$ otherwise.
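Two of these conventions are easy to misread, so here is a small sketch of them. The helpers `restrict` and `restricted_mean` are hypothetical names, and the second one is only an empirical average over samples. Note that $\mathbb {E}^{\mathcal {E}}$ is not a conditional expectation: for $Y$ uniform on $\{1,2,3,4\}$ and $\mathcal {E} = \{Y \text{ even}\}$, we have $\mathbb {E}^{\mathcal {E}}[Y] = (2+4)/4 = 1.5$, whereas $\mathbb {E}[Y \mid \mathcal {E}] = 3$.

```python
import numpy as np

def restrict(v, J):
    """v_J: keep the coordinates of v indexed by J and set the others to 0.
    J holds 0-based indices here, while the text indexes [n] = {1, ..., n}."""
    out = np.zeros_like(v)
    idx = np.array(sorted(J), dtype=int)
    out[idx] = v[idx]
    return out

def restricted_mean(samples, event):
    """Empirical version of E^E[Y] = E[Y 1_E]: average Y * 1_E(Y) over samples.
    Note this is not the conditional expectation E[Y | E]."""
    y = np.asarray(samples, dtype=float)
    indicator = np.array([1.0 if event(t) else 0.0 for t in y])
    return float(np.mean(y * indicator))

print(restrict(np.array([3.0, -1.0, 4.0, 1.5]), {0, 2}))        # [3. 0. 4. 0.]
print(restricted_mean([1, 2, 3, 4], lambda t: t % 2 == 0))       # 1.5
```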

We shall use the notation $X \lesssim Y$ to indicate that there exists a constant $C>0$ for which $X \leqslant CY$. In a slight departure from convention, we will always allow this constant to depend on the subgaussian constant B, if present. We shall also allow the constants implicit in big-O notation to depend on B, if this constant is relevant in the context. We hope that we have been clear as to where the subgaussian constant is relevant; this convention is simply to reduce clutter.

4 Quasi-randomness properties

In this technical section, we define a list of “quasi-random” properties of $A_n$ that hold with probability $1-e^{-\Omega (n)}$